Homebrew developer runs real-time ray tracing test on 1994 Sega Saturn — ancient hardware's untapped power revealed, more refinements to come

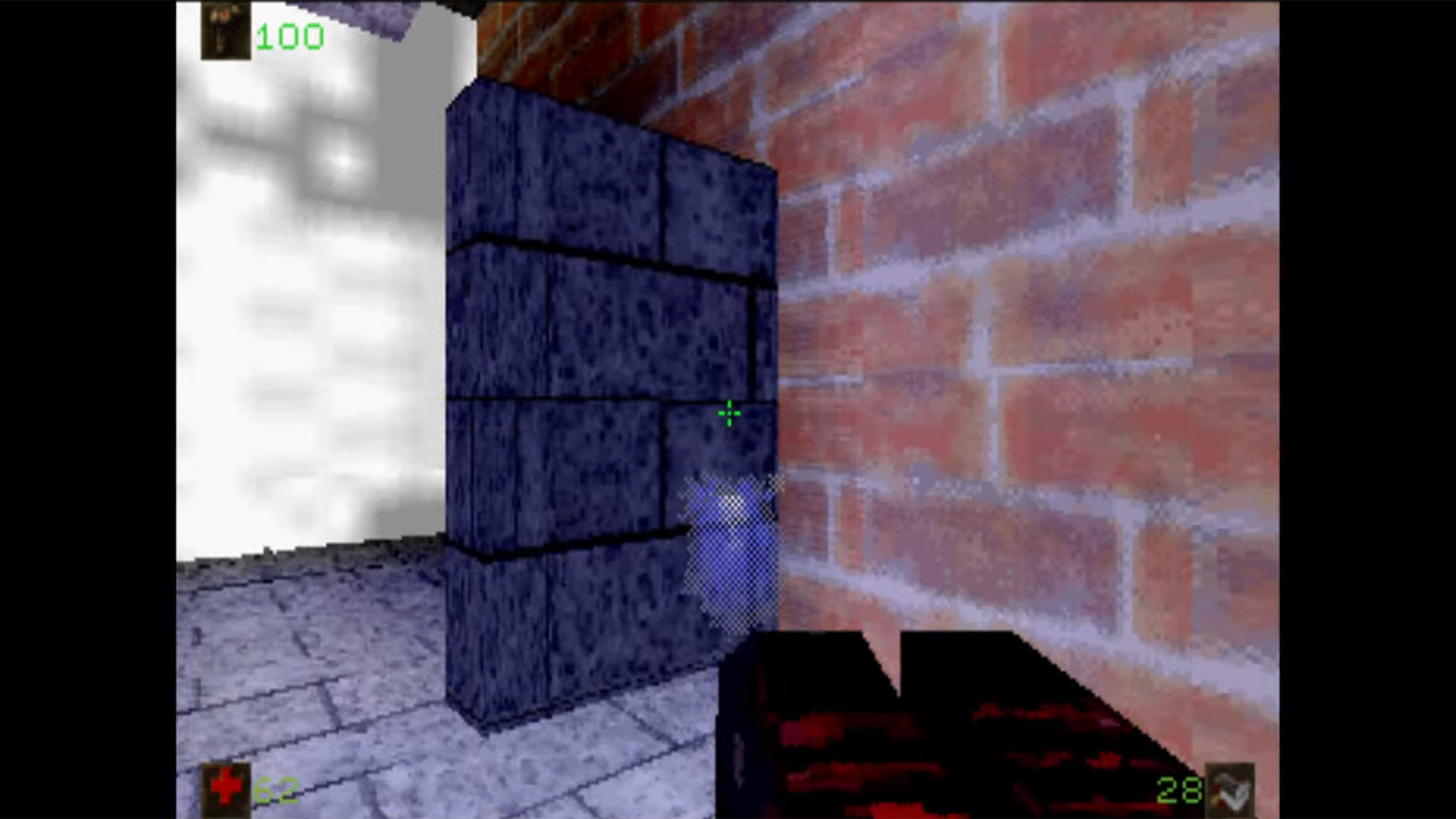

First-person view shows real-time shadows and a gun’s muzzle flash lighting up a small room.


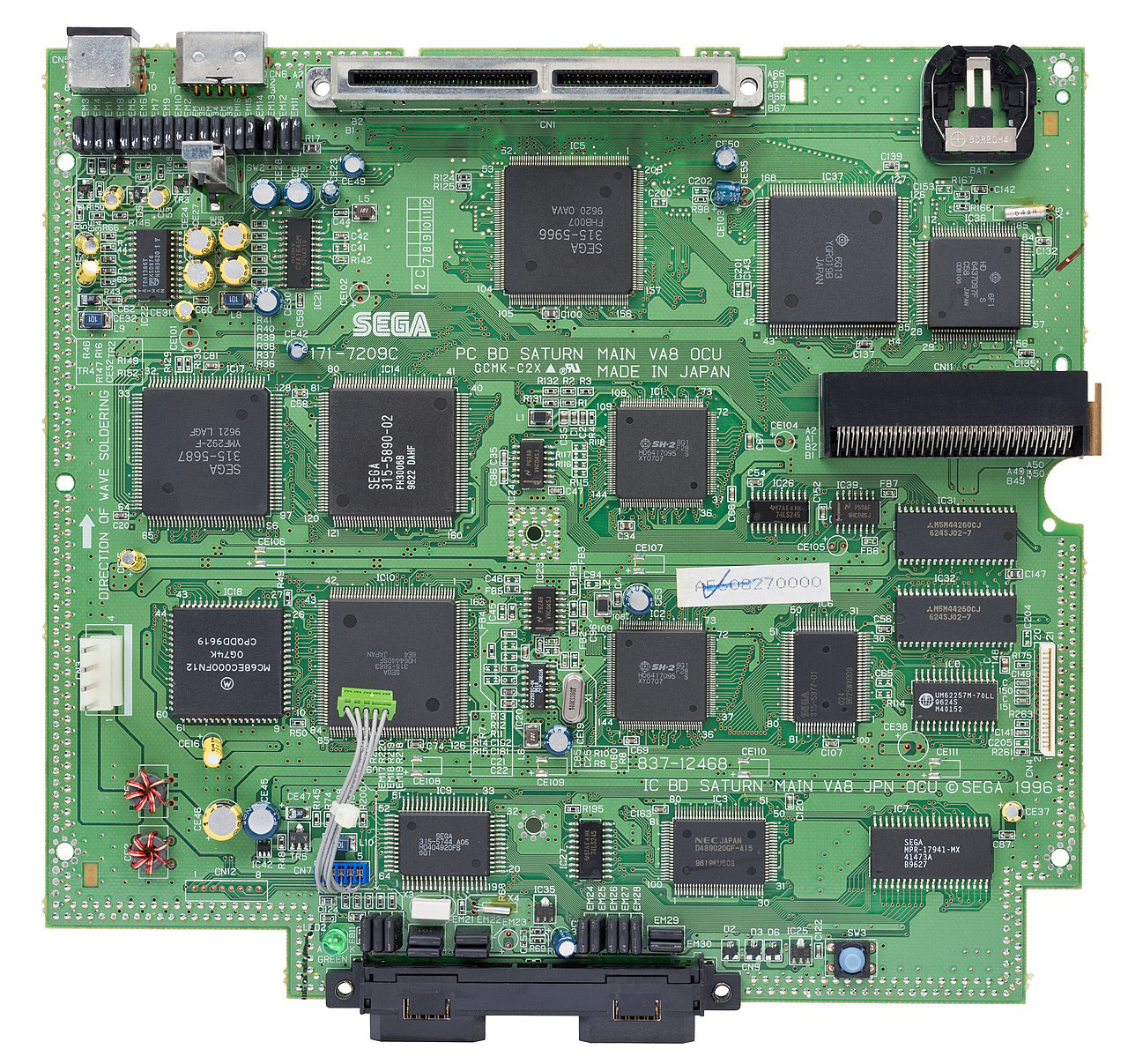

A recently published video shows real-time raytraced shadows in a game environment running within the improbable confines of a mid-1990s console. The Sega Saturn was notoriously complex and difficult to optimize games for when it was introduced. However, this video shows that there are still surprises being wrung out of Sega’s dual-CPU and dual-video-processor system, which debuted in late 1994. Match that PSX!

Real-time raytracing started going mainstream in PC gaming graphics with the launch of Nvidia’s first RTX 20-series graphics cards, based on Turing GPUs, in late 2018. Some would argue it took another generation, plus the wide adoption of modern upscaling tech, for raytraced games to gain real mainstream momentum. Seeing any kind of real-time raytracing in action on a 1994-vintage console is therefore fascinating.

XL2 introduces the Saturn raytracing video clip by saying, “Here is a raytracing test in a small room. The function is pretty simple and could be optimized further: I simply test all the vertices using the BSP [Binary Space Partitioning].” The developer also explains that several optimizations are in place: the engine only tests the vertices of 3D objects, only a quarter of those vertices are updated per frame, and light sources are kept to a minimum.
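To make the "quarter of the vertices per frame" idea concrete, here is a minimal Python sketch, not XL2's actual code. It assumes a single point light, a binary lit/shadowed value per vertex, and sphere occluders standing in for the BSP test the developer mentions. All names (`Sphere`, `segment_hits_sphere`, `update_vertex_lighting`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Sphere:
    """Stand-in occluder (assumption: the real demo tests against a BSP)."""
    center: Vec3
    radius: float


def segment_hits_sphere(p0: Vec3, p1: Vec3, sphere: Sphere) -> bool:
    """True if the segment from p0 to p1 touches or passes through the sphere."""
    d = sub(p1, p0)
    m = sub(p0, sphere.center)
    a = dot(d, d)
    if a == 0.0:
        # Degenerate segment: just test the single point.
        return dot(m, m) <= sphere.radius ** 2
    # Parameter of the point on the segment closest to the sphere center.
    t = max(0.0, min(1.0, -dot(m, d) / a))
    closest = (p0[0] + t * d[0], p0[1] + t * d[1], p0[2] + t * d[2])
    diff = sub(closest, sphere.center)
    return dot(diff, diff) <= sphere.radius ** 2


def update_vertex_lighting(vertices: List[Vec3], light_levels: List[float],
                           light_pos: Vec3, occluders: List[Sphere],
                           frame: int, stride: int = 4) -> None:
    """Refresh every `stride`-th vertex this frame; the rest keep stale values."""
    for i in range(frame % stride, len(vertices), stride):
        blocked = any(segment_hits_sphere(vertices[i], light_pos, s)
                      for s in occluders)
        light_levels[i] = 0.0 if blocked else 1.0
```

Calling `update_vertex_lighting` once per frame with an increasing `frame` counter spreads the cost over four frames, so each vertex's shadow state can be up to three frames out of date. That would be consistent with the gradual update visible in the clip.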

The Saturn homebrew dev hints that the demo we see may be improved in due course. They indicate that a number of paths to refinement are available, some of which would be “super easy,” while others may need “a bit more maths.” So stay tuned to XL2’s channel if you like what you see.

Some Sega Saturn history

Sega’s Saturn was the iconic game company’s first console designed from the ground up as a 32-bit system. It was built to excel at 2D arcade ports, yet was still capable of running emerging 3D-centric titles.

Launched in November 1994 in Japan and May 1995 in the U.S., it became available at roughly the same time as the original Sony PlayStation (PSX, PS1) in both of these key territories. In general, the PSX was weaker at 2D, but offered more balanced, dev-friendly 3D capabilities. Both the Saturn and PSX pre-dated the Nintendo 64, still cartridge-based but a strong 3D performer, by a year to a year and a half.

Sega followed up the Saturn with its awesome Dreamcast, which would be its final console hardware release, giving way to the Sony / Nintendo / Microsoft era.


-

bit_user

People have been using ray tracing for hit-detection for ages. This isn't much different. It's so low resolution that it's really not comparable to any form of modern ray tracing.

Furthermore, it's happening in a very small demo environment with very simple geometry, and yet you can see a serious lag, as it gradually updates all of the vertices. Definitely doesn't seem like it would scale up well to an entire game.

Lamarr the Strelok

Jabberwocky79 said: But can it run Crysis? (Sorry, someone had to say it).

Never apologize for quoting elegant prose of old.

Drunk Ukrainian

Lamarr the Strelok said: Never apologize for quoting elegant prose of old.

I was there, Gandalf, 3000 years ago.

Lamarr the Strelok

bit_user said: People have been using ray tracing for hit-detection for ages. This isn't much different. It's so low resolution that it's really not comparable to any form of modern ray tracing. Furthermore, it's happening in a very small demo environment with very simple geometry, and yet you can see a serious lag, as it gradually updates all of the vertices. Definitely doesn't seem like it would scale up well to an entire game.

Just to remind people Nvidia didn't invent ray tracing. They put a lotta money into it and dumb amounts of marketing for it but that's about it for me, thankfully. RT is the equivalent of paying $200 extra for a GPU card for anti-aliasing or any number of the other settings we already have on GPUs.

bit_user

Lamarr the Strelok said: Just to remind people Nvidia didn't invent ray tracing.

I first dabbled with POV-Ray back in the early 1990s, on a 386 (plus Cyrix FasMath math coprocessor).

bit_user

call101010 said: H-ftFdH7heU

It's misleading to post that clip with no explanation. That is not an example of realtime ray tracing. It was a clip rendered non-realtime using Lightwave 3D. It is simply the lead-in to the same creator's Lightwave 3D tutorial, which explains how to model & render such content.

View: https://www.youtube.com/watch?v=-xcREWLPhsM

I realize the clip could've explained itself a bit better, but you also might've exercised a bit of skepticism, if you thought any Amiga could actually render stuff like that in realtime.

JamesJones44

I'm curious what output resolution was used. The Saturn had a range of output resolutions that could be used for NTSC and PAL.

bit_user

JamesJones44 said: I'm curious what output resolution was used. The Saturn had a range of output resolutions that could be used for NTSC and PAL

Well, just eyeballing it, I'd guess something like 320x240:

View: https://www.youtube.com/watch?v=p8rQ47YRbFs

However, the ray tracing isn't happening in screen space. Apparently, it isn't happening in texture space, either. According to the description, the demo is computing the lighting coefficient for a quarter of the vertices in each frame. So, ray intersection tests are only being performed between these vertices and the light source(s). Then Gouraud shading is used to interpolate the lighting coefficients between vertices.

Remember, this was back in the days of fixed-function rendering pipelines. It might not even have been possible to dynamically compute a shadow map. But you could do per-vertex lighting.
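As a rough illustration of the interpolation step described above, the following Python sketch blends three per-vertex lighting coefficients across a 2D triangle using barycentric weights. This is the general idea behind Gouraud shading, not a description of how the Saturn's hardware performs it. The function names and the triangle setup are hypothetical.

```python
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def barycentric(p: Vec2, a: Vec2, b: Vec2, c: Vec2) -> Tuple[float, float, float]:
    """Barycentric weights of 2D point p relative to triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        raise ValueError("degenerate triangle")
    w0 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w1 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return w0, w1, 1.0 - w0 - w1


def gouraud_light(p: Vec2, tri: Sequence[Vec2], levels: Sequence[float]) -> float:
    """Interpolate per-vertex lighting coefficients at point p inside tri."""
    w0, w1, w2 = barycentric(p, tri[0], tri[1], tri[2])
    return w0 * levels[0] + w1 * levels[1] + w2 * levels[2]
```

With one lit vertex (1.0) and two shadowed ones (0.0), a point halfway along the edge between the lit vertex and a shadowed one gets 0.5. That is why per-vertex shadows look soft and low-resolution rather than having crisp edges.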