Phison demos 10X faster AI inference on consumer PCs with software and hardware combo that enables 3x larger AI models — Nvidia, AMD, MSI, and Acer systems demoed with aiDAPTIV+

Make ordinary PCs run extraordinary things.


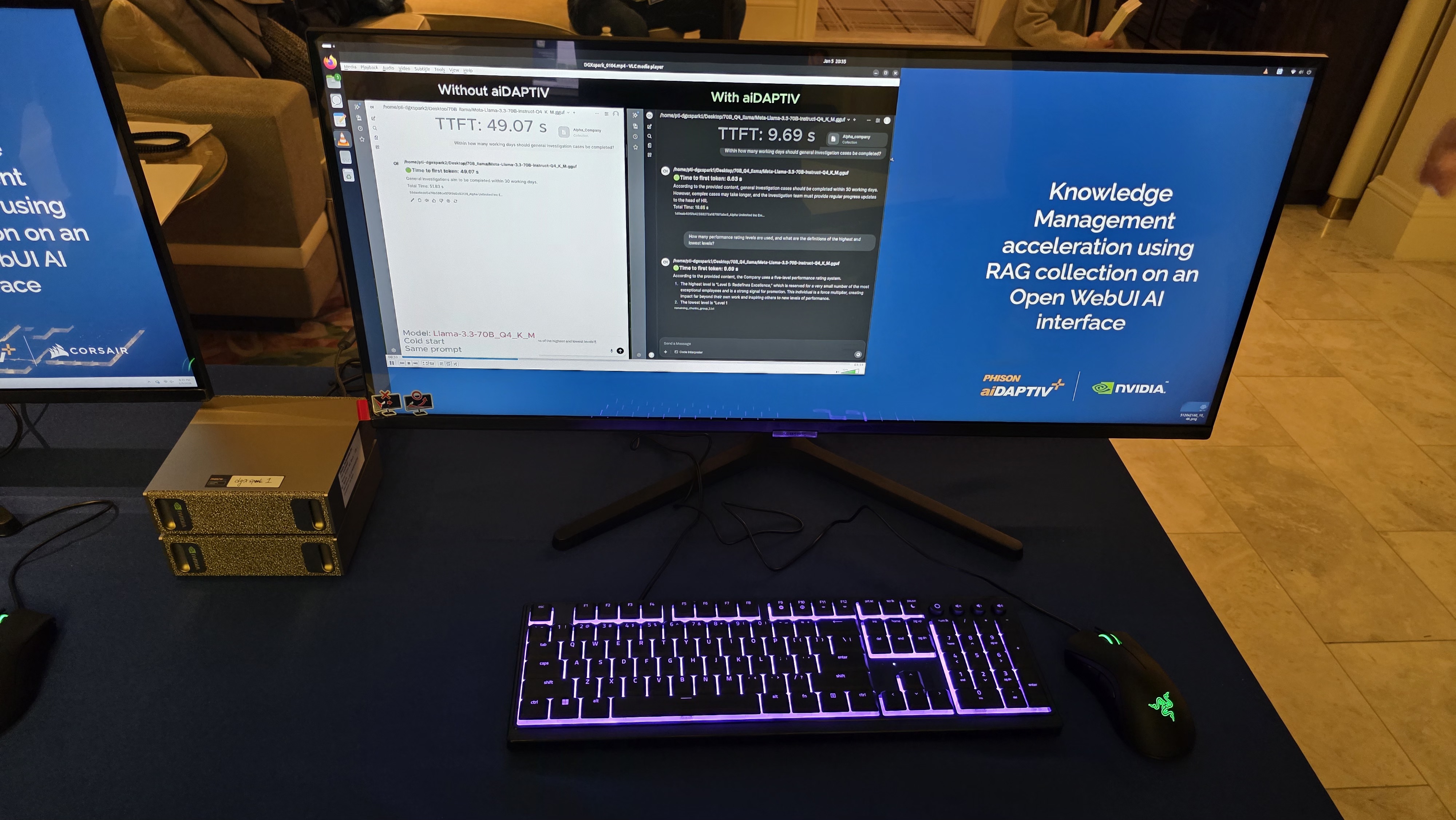

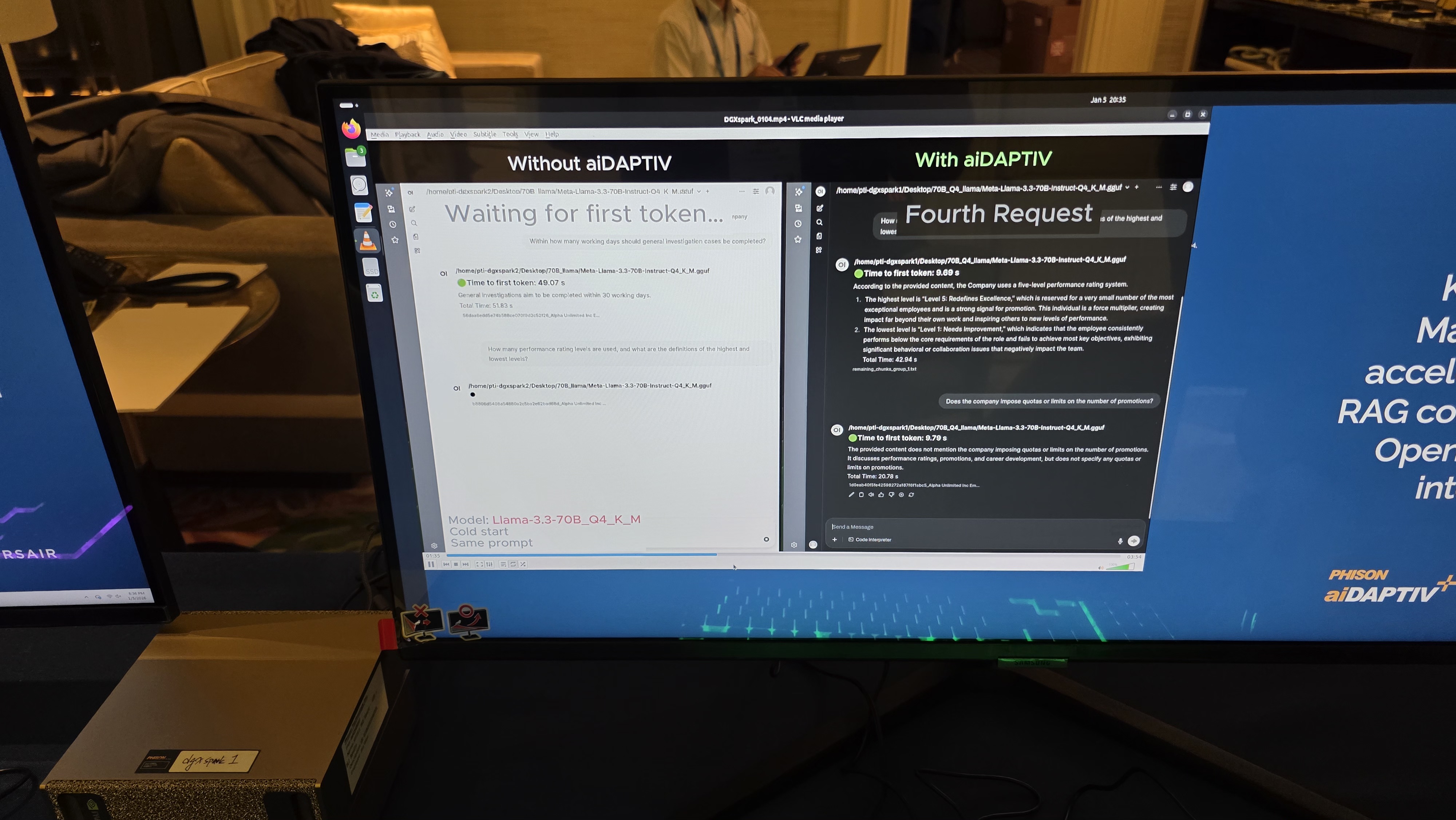

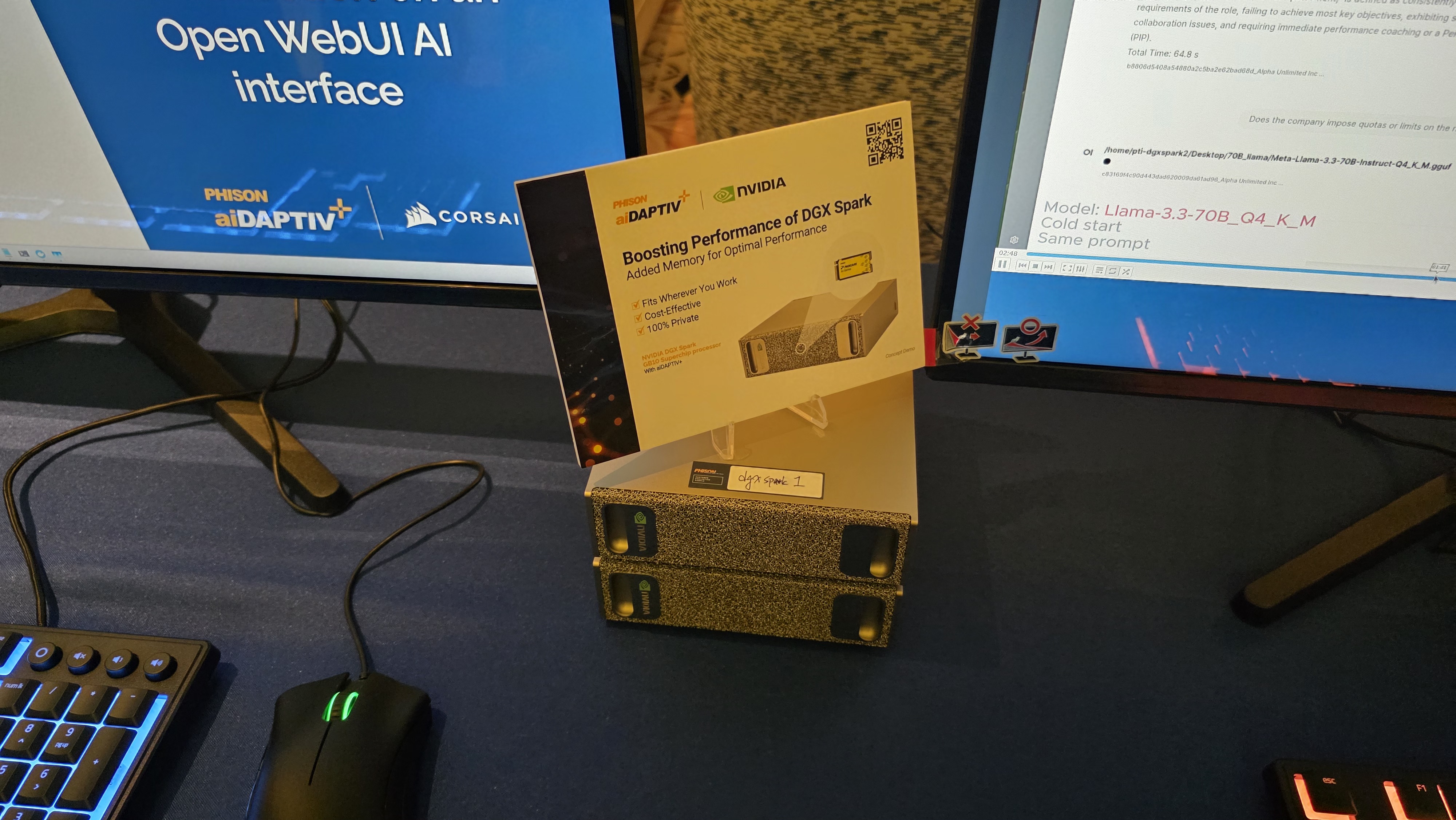

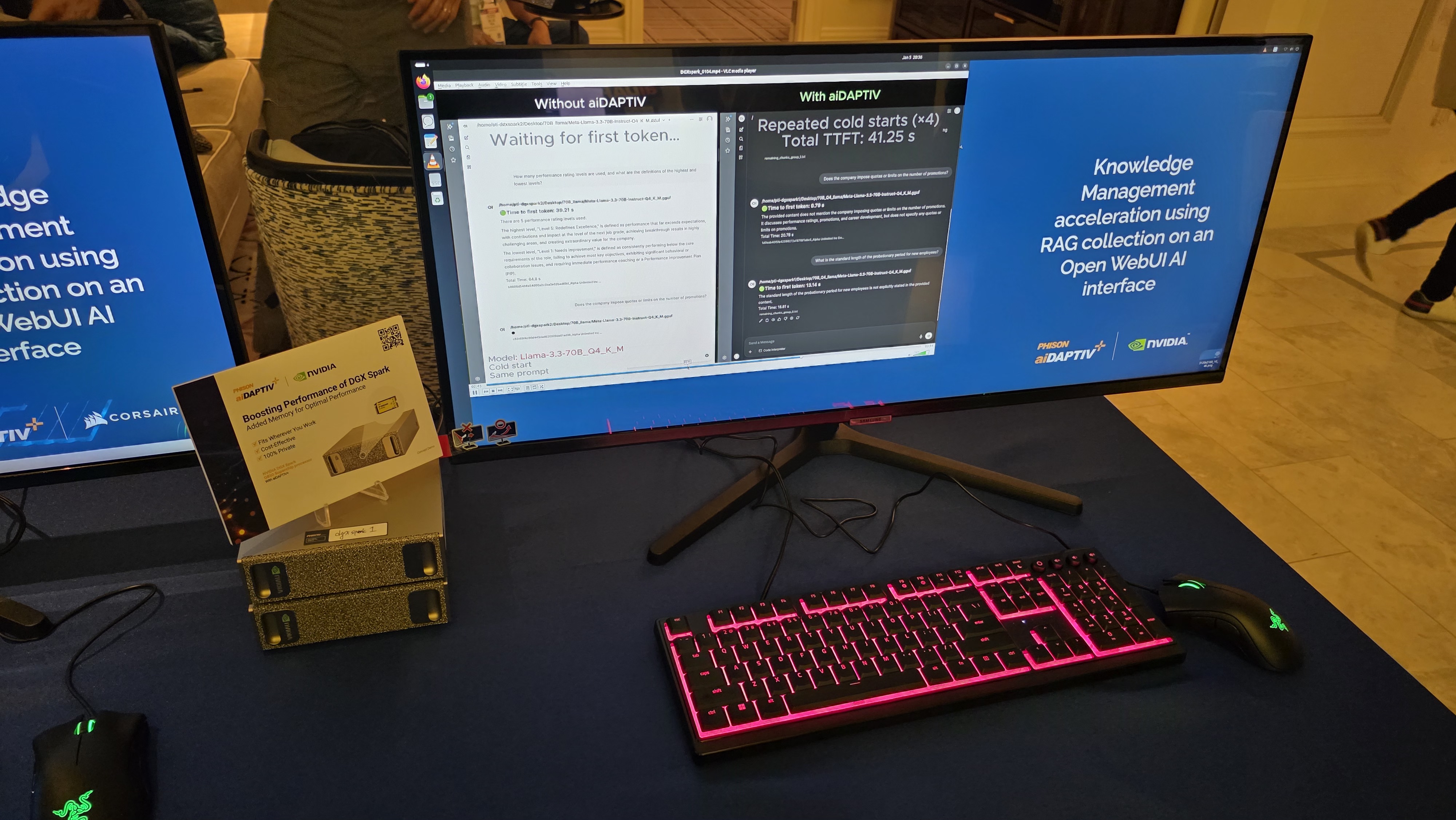

At CES 2026, Phison demonstrated consumer PCs running AI inference up to ten times faster with its aiDAPTIV+ software/hardware combo than without it. When Phison introduced aiDAPTIV+ in mid-2024, the technology essentially turned NAND flash into a managed memory tier alongside DRAM, enabling large AI models to be trained or run on systems without enough DDR5 and/or HBM memory. At the time, however, it was merely a proof of concept aimed at enterprises. By early 2026, the positioning has changed: Phison now sees aiDAPTIV+ as an enabler of AI inference on client PCs, which considerably broadens its range of use cases.

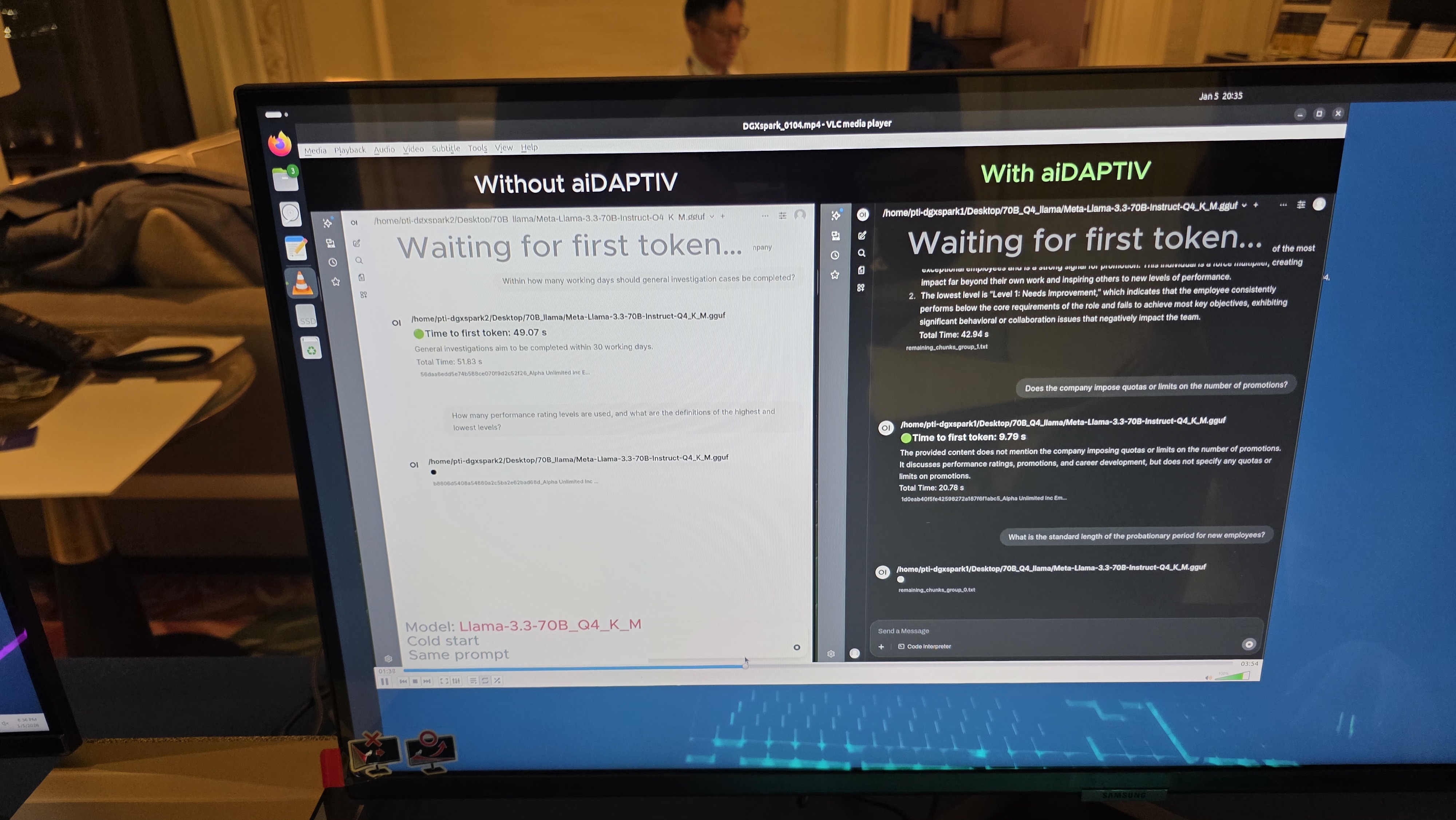

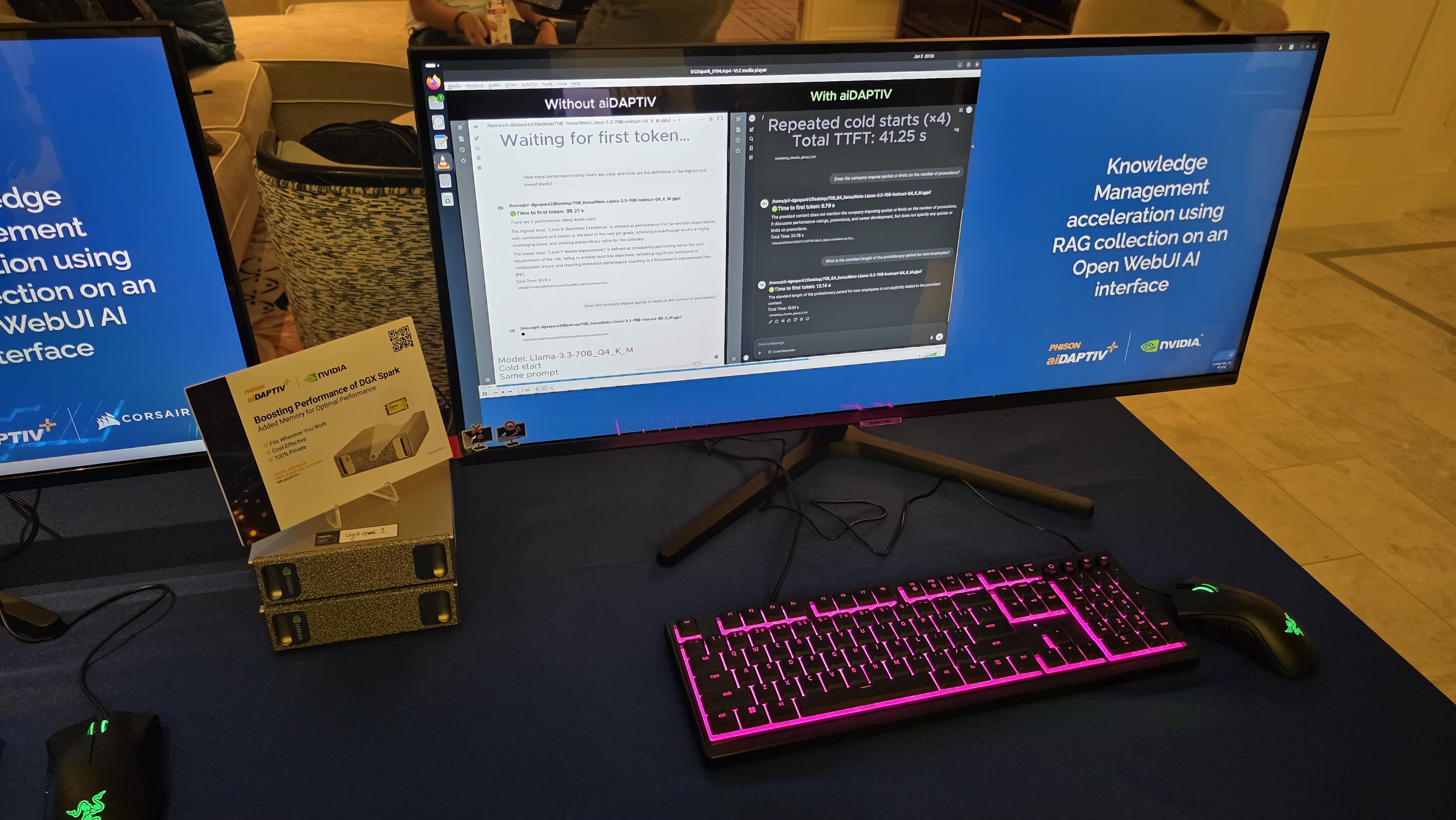

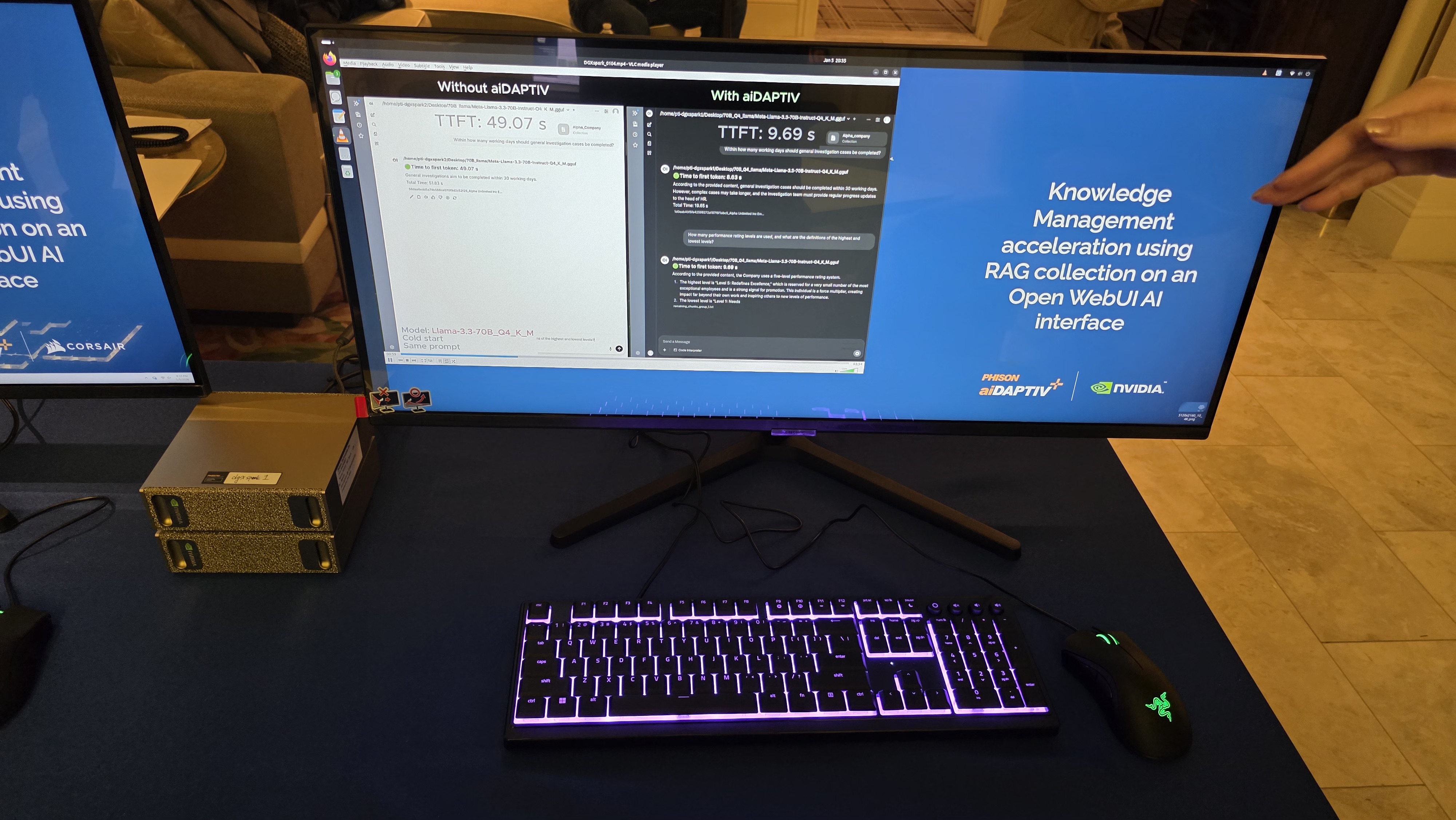

Normally, when the key-value (KV) cache no longer fits into GPU memory during inference, older KV entries are evicted, so if and when the model needs those tokens again (for example, with long contexts or agent loops), the GPU must recompute them from scratch, which makes AI inference inefficient on systems with limited memory capacity. On a system equipped with Phison's aiDAPTIV+ stack, however, KV entries that no longer fit into GPU memory are written to flash and retained for later reuse. This can reduce memory requirements in many cases and dramatically decrease the time to first token (TTFT), the time it takes the model to produce the first token of a response.
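To make the evict-versus-spill distinction concrete, here is a toy Python model. It is a minimal sketch, not Phison's implementation: the `TieredKVCache` class, its LRU eviction policy, and the access pattern are hypothetical, and short strings stand in for real key/value tensors.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy KV cache: a small fast tier (standing in for GPU memory) plus an
    optional large slow tier (standing in for flash). Hypothetical sketch."""

    def __init__(self, fast_capacity: int, spill_to_flash: bool) -> None:
        self.fast_capacity = fast_capacity    # max entries kept in the fast tier
        self.spill_to_flash = spill_to_flash  # retain evicted entries instead of dropping them
        self.fast = OrderedDict()             # token_id -> KV entry, least recently used first
        self.flash = {}                       # token_id -> KV entry moved out of the fast tier
        self.recomputes = 0                   # entries rebuilt from scratch
        self.flash_hits = 0                   # entries reloaded from the slow tier

    def _compute_kv(self, token_id: int) -> str:
        # Placeholder for the expensive K/V projection over the token.
        self.recomputes += 1
        return f"kv{token_id}"

    def _insert(self, token_id: int, kv: str) -> None:
        self.fast[token_id] = kv
        self.fast.move_to_end(token_id)
        while len(self.fast) > self.fast_capacity:
            old_id, old_kv = self.fast.popitem(last=False)  # evict the least recently used entry
            if self.spill_to_flash:
                self.flash[old_id] = old_kv  # written to the slow tier for later reuse
            # without spilling, the evicted entry is simply lost

    def get(self, token_id: int) -> str:
        if token_id in self.fast:
            self.fast.move_to_end(token_id)
            return self.fast[token_id]
        if token_id in self.flash:
            self.flash_hits += 1
            kv = self.flash.pop(token_id)
        else:
            kv = self._compute_kv(token_id)
        self._insert(token_id, kv)
        return kv


def run(spill: bool) -> TieredKVCache:
    """Read an 8-token context twice (as a long chat or agent loop might) with room for 4 entries."""
    cache = TieredKVCache(fast_capacity=4, spill_to_flash=spill)
    for _ in range(2):
        for token_id in range(8):
            cache.get(token_id)
    return cache
```

With spilling disabled, all 16 lookups are recomputes, because LRU eviction always discards exactly the entries the next pass needs. With spilling enabled, only the first pass computes (8 recomputes) and the second pass is served entirely by 8 reloads from the slow tier.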

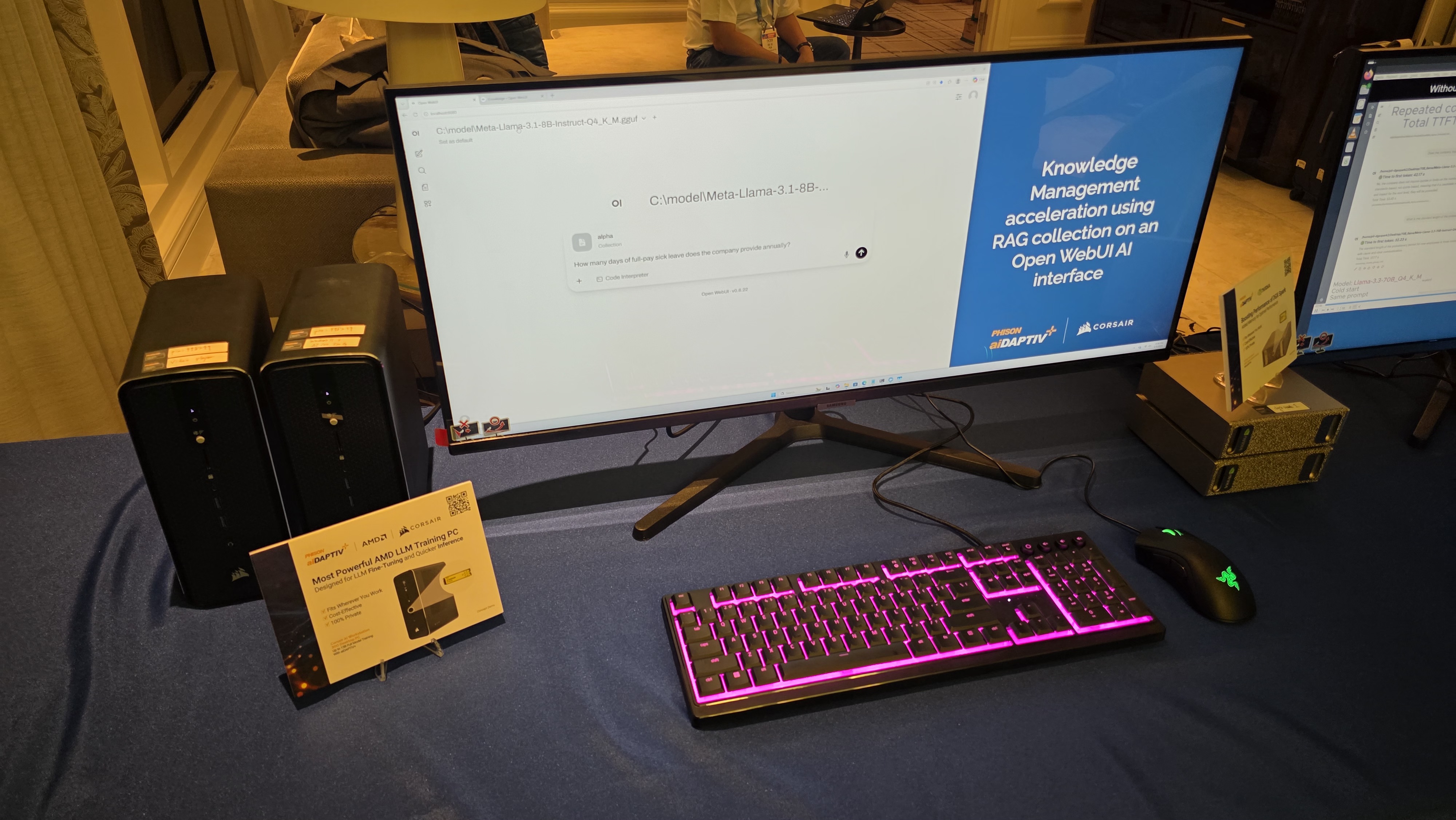

In its renewed form, the aiDAPTIV+ platform is designed to let ordinary PCs with entry-level or even integrated GPUs handle far larger AI models than their installed DRAM would normally permit. Bringing large-model inference and limited training to desktops and notebooks may be valuable for developers and small businesses that cannot currently afford big investments in AI, and several aiDAPTIV+ testing partners, including Acer, Asus, Corsair, Emdoor, MSI, and even Nvidia, showed systems featuring the technology at CES 2026. For example, Acer managed to run the gpt-oss-120b model on a laptop with just 32GB of memory, which opens the door to a number of local AI applications.

According to Phison's internal testing, aiDAPTIV+ can accelerate inference response times by up to 10 times, as well as reduce power consumption and improve time to first token on notebook PCs. The larger the model and the longer the context, the higher the gain, so the technology is especially relevant for Mixture of Experts (MoE) models and agentic AI workloads. Phison claims that a 120-billion-parameter MoE model can be handled with 32 GB of DRAM, compared with roughly 96 GB required by conventional approaches, because inactive parameters are kept in flash rather than resident in main memory.
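Why keeping inactive experts on flash matters comes down to footprint arithmetic: weight memory is parameters times bytes per parameter, and an MoE model only touches a small subset of its parameters per token. The Python sketch below uses illustrative assumptions (4-bit weights, 5 billion active parameters, and a hypothetical 25% of the inactive experts cached in DRAM). It counts weights only, ignoring KV cache, activations, and runtime overhead, so it does not attempt to reproduce Phison's 96 GB and 32 GB figures.

```python
def weight_gb(params_billion: float, bits_per_param: float) -> float:
    """Weight footprint in GB (10^9 bytes) for a parameter count and precision."""
    return params_billion * bits_per_param / 8


def moe_resident_gb(total_b: float, active_b: float, bits: float, cached_fraction: float) -> float:
    """Hypothetical DRAM footprint when the active parameters plus a cached share of
    the inactive experts stay resident and everything else stays on flash."""
    inactive_b = total_b - active_b
    return weight_gb(active_b + cached_fraction * inactive_b, bits)


everything_resident = weight_gb(120, 4)              # 60.0 GB: exceeds 32 GB of DRAM on its own
experts_on_flash = moe_resident_gb(120, 5, 4, 0.25)  # 16.875 GB: fits, with room to spare
```

The trade-off is that any expert not already in DRAM must be fetched from the SSD when a token routes to it, so flash bandwidth and the caching policy determine how much of the saving survives in practice.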

Given that Phison's aiDAPTIV+ stack involves an AI-aware SSD (or SSDs) based on an advanced controller from Phison, special firmware, and software, implementing the technology in a system should be fairly straightforward. That matters to PC makers, value-added resellers, and small businesses interested in this capability, so it is reasonable to expect several of them to adopt it in premium models aimed at developers and power users. For Phison, this means more sales of its controllers as well as added revenue from selling the aiDAPTIV+ stack to partners.

DougMcC

> nichrome said: I do hope the phison does not dramatically increase time to first token.

Came to point out the same error, but you beat me to it.

gc9

Earlier article on the previous target niche (specializing models on smaller, slower, but private on-premises hardware rather than in the cloud):

https://www.3dtested.com/pc-components/cpus/phisons-new-software-uses-ssds-and-dram-to-boost-effective-memory-for-ai-training-demos-a-single-workstation-running-a-massive-70-billion-parameter-model-at-gtc-2024

bit_user

> The article said: Given that Phison's aiDAPTIV+ stack involves an AI-aware SSD (or SSDs) based on an advanced controller from Phison, special firmware, and software

Well, embedding it in the SSD controller's firmware is one way to make sure they stay in the value chain. However, I do genuinely wonder how much benefit it gets from being there. SSDs don't have particularly fast CPU cores, and so I'd expect that the caching layer could be implemented purely in software on the host CPU, without much degradation of performance.

I guess the main benefit of integrating into the SSD is that it could occur as part of the normal FTL (Flash Translation Layer), rather than needing to introduce an entirely new layer for it. However, there are even some datacenter SSDs that essentially allow the host processor to do all the FTL stuff.

JarredWaltonGPU

> bit_user said: Well, embedding it in the SSD controller's firmware is one way to make sure they stay in the value chain. However, I do genuinely wonder how much benefit it gets from being there. SSDs don't have particularly fast CPU cores, and so I'd expect that the caching layer could be implemented purely in software on the host CPU, without much degradation of performance.

So there are two use cases for aiDAPTIV. For inference, the SSD serves as a cache for computed KV values, and in some scenarios this can provide massive benefits. Basically, if you are doing inference and run out of VRAM, older KV calculations get dumped and lost. With a longer context length, those KV pairs may need to be recomputed, and it's actually much faster to just retrieve them from a fast SSD (at 7~10 GB/s) than to recompute them, even on hardware like NVIDIA H200. (I don't think any testing has been done with B200 / B300 yet.) This mostly results in much faster TTFT (time to first token), at times showing over a 10X benefit. DGX Spark, for example, was shown taking ~40 seconds for TTFT versus ~9 seconds with aiDAPTIV; Strix Halo showed ~6 seconds with aiDAPTIV and ~36 seconds without.
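The claim that reloading KV data from a 7 to 10 GB/s SSD can beat recomputing it is easy to sanity-check with back-of-envelope arithmetic. A rough Python sketch; the model shape (32 layers, 8 KV heads, 128-dimensional heads, FP16) is an assumed example roughly matching an 8B-class model with grouped-query attention, and real KV layouts vary by model and runtime.

```python
def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes of cached keys plus values (the factor of 2) per token across all layers."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def reload_seconds(tokens: int, bytes_per_token: int, ssd_gb_per_s: float) -> float:
    """Time to stream a context's KV entries back from the SSD at a sustained rate."""
    return tokens * bytes_per_token / (ssd_gb_per_s * 1e9)


def ttft_speedup(without_s: float, with_s: float) -> float:
    """Ratio of time to first token without and with the SSD-backed KV cache."""
    return without_s / with_s


per_token = kv_bytes_per_token(layers=32, kv_heads=8, head_dim=128)  # 131,072 bytes (128 KiB)
reload_100k = reload_seconds(100_000, per_token, ssd_gb_per_s=7.0)     # about 1.9 s at 7 GB/s
dgx_spark = ttft_speedup(40, 9)    # about 4.4x from the quoted DGX Spark TTFT figures
strix_halo = ttft_speedup(36, 6)   # 6.0x from the quoted Strix Halo figures
```

Reloading wins whenever this transfer time is shorter than re-running prefill over the same tokens on the GPU in question.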

The other use case is for fine-tune training of models. The standard NVIDIA approach is to have everything loaded into GPU memory, so if you want to fine-tune a 70B-parameter LLM, for example, you need roughly 20 bytes of memory per parameter, or about 1.4 TB. That would require something like eighteen H100, ten H200, eight B200, or five B300 GPUs just to satisfy the VRAM requirements. With aiDAPTIV, the process gets broken up into chunks that can fit within the available GPU memory (though generally I think it still needs at least a 16GB GPU for models that have 8B or more parameters). There's only about a 10 percent performance loss by going this route, with the benefit being the potential to fine-tune much larger models on relatively modest hardware.
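Multiplying the parameter count by the ~20 bytes-per-parameter rule of thumb and dividing by per-GPU memory reproduces the GPU counts in the comment. A quick Python check; the capacities are the commonly quoted HBM sizes for each part and are an assumption here (usable capacity can be lower), and the count covers memory only, not compute or parallelism overheads.

```python
import math

BYTES_PER_PARAM = 20  # rule of thumb from the comment for full fine-tuning

# Commonly quoted per-GPU memory capacities in GB (assumed; usable capacity can be lower).
GPU_MEMORY_GB = {"H100": 80, "H200": 141, "B200": 192, "B300": 288}


def finetune_memory_gb(params_billion: float) -> float:
    """Approximate memory for full fine-tuning with everything resident on the GPUs."""
    return params_billion * BYTES_PER_PARAM


def gpus_needed(params_billion: float) -> dict:
    """Minimum GPU count per model to hold the job in VRAM (memory only)."""
    need_gb = finetune_memory_gb(params_billion)
    return {name: math.ceil(need_gb / cap) for name, cap in GPU_MEMORY_GB.items()}


print(finetune_memory_gb(70))  # 1400 (GB), i.e. 1.4 TB
print(gpus_needed(70))         # {'H100': 18, 'H200': 10, 'B200': 8, 'B300': 5}
```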

At SC25, as a more extreme example, Phison demonstrated fine-tuning of Llama 3.1 405B using just two RTX Pro 6000 GPUs. So that's 192GB of total VRAM, for a task that would normally need around 8 TB of memory. It took about 25 hours per epoch, doing full FP16 precision training, using an 8TB aiDAPTIV SSD for caching. The cost of the server/workstation in this case was around $50,000. Using 8x GPUs per server with NVIDIA AI GPUs would have a cost closer to $4 million (give or take, as NVIDIA isn't exactly forthcoming on pricing). And you could do the training on premises, rather than going the typical cloud route, which a lot of companies are interested in doing.
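The 405B demo implies heavy oversubscription of GPU memory, which is why the work has to be split into pieces that take turns on the GPUs. The Python sketch below estimates the footprint with the same ~20 bytes-per-parameter rule and then greedily packs consecutive layers into chunks that fit a VRAM budget. Phison hasn't detailed here how aiDAPTIV partitions the job, so this is a generic illustration of chunked offloading under assumptions: the two cards' 192 GB is treated as one pool, the 100 equal-sized layers are a hypothetical simplification, and real runs also need headroom for activations.

```python
def plan_chunks(layer_sizes_gb: list, budget_gb: float) -> list:
    """Greedily group consecutive layers into chunks that each fit within budget_gb.
    Each chunk would be loaded onto the GPUs in turn while the rest waits on the SSD."""
    chunks, current, used = [], [], 0.0
    for i, size in enumerate(layer_sizes_gb):
        if size > budget_gb:
            raise ValueError(f"layer {i} ({size} GB) exceeds the {budget_gb} GB budget")
        if used + size > budget_gb:  # close the current chunk before it overflows
            chunks.append(current)
            current, used = [], 0.0
        current.append(i)
        used += size
    if current:
        chunks.append(current)
    return chunks


total_gb = 405 * 20                     # 8,100 GB, the "around 8 TB" in the comment
vram_gb = 2 * 96                        # two RTX Pro 6000 cards, 192 GB total
oversubscription = total_gb / vram_gb   # 42.1875: about 42x more state than fits in VRAM

layers = [total_gb / 100] * 100         # hypothetical: 100 equal layers of 81 GB each
chunks = plan_chunks(layers, vram_gb)   # 50 chunks of 2 layers (162 GB each)
```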

For fine-tuning, aiDAPTIV SSDs are configured as SLC NAND. (It's a firmware thing, where TLC NAND stays in pure pSLC mode.) Instead of ~5,000 program/erase cycles in TLC mode, the NAND gets about 60,000 PE cycles. Combined with overprovisioning, the aiDAPTIV SSDs are rated for 100 DWPD (drive writes per day). Now, if you're wondering if that's even necessary, I wondered the same thing.
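Running TLC NAND in pSLC mode trades capacity for endurance, and the stated cycle counts show why that trade suits write-heavy work. An idealized Python sketch, assuming pSLC stores one bit per cell where TLC stores three, and ignoring write amplification and the overprovisioning the comment mentions:

```python
TLC_BITS_PER_CELL = 3


def lifetime_writes_tb(raw_tlc_tb: float, bits_per_cell: int, pe_cycles: int) -> float:
    """Idealized total writes (TB) a pool of TLC NAND can absorb when run at a given
    bits-per-cell mode: usable capacity times program/erase cycles."""
    return raw_tlc_tb * bits_per_cell * pe_cycles / TLC_BITS_PER_CELL


tlc = lifetime_writes_tb(2, 3, 5_000)    # 10,000 TB: full capacity, ~5,000 P/E cycles
pslc = lifetime_writes_tb(2, 1, 60_000)  # 40,000 TB: one third of the capacity, ~60,000 cycles
```

Under these assumptions, the same NAND absorbs roughly four times as much data in pSLC mode, at the cost of exposing only a third of its raw capacity.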

I did some testing, and using an AI100E 320GB drive and training the Llama 3.2 8B LLM, I saw up to 11 TB per hour of writes to the SSD. Companies and individuals generally won't be doing 24/7 fine-tune training, but in theory you could write about 250 TB per day if you were to do that. A typical 2TB consumer SSD will offer around 0.3 DWPD, or 1200 TBW total over five years. In a worst-case scenario, aiDAPTIV could burn out that sort of SSD in a week! LOL. Do note that this means the drives are much more expensive per TB of capacity, as the 320GB drive uses 2TB of raw TLC NAND, the 1TB drive uses 4TB of NAND, and the 2TB drive uses 8TB of TLC NAND. (And the 8TB models like the AI200E are the equivalent of a 32TB SSD!) Given NAND shortages, prices are all a bit up in the air.
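The wear-out estimate follows from converting a TBW rating to DWPD and dividing the TBW by the daily write volume. A small Python sketch using the figures in the comment (a 2 TB consumer drive rated for 1,200 TBW over five years, and a peak of 11 TB per hour):

```python
DAYS_PER_YEAR = 365


def dwpd_from_tbw(tbw_tb: float, capacity_tb: float, years: float) -> float:
    """Drive writes per day implied by a TBW rating over a warranty period."""
    return tbw_tb / (capacity_tb * DAYS_PER_YEAR * years)


def days_to_exhaust(tbw_tb: float, writes_tb_per_day: float) -> float:
    """Days until the rated endurance is used up at a constant daily write volume."""
    return tbw_tb / writes_tb_per_day


consumer_dwpd = dwpd_from_tbw(1200, 2, 5)           # about 0.33 DWPD
peak_tb_per_day = 11 * 24                           # 264 TB/day if the peak rate were sustained
days_left = days_to_exhaust(1200, peak_tb_per_day)  # about 4.5 days, i.e. under a week
```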

bit_user

Hi Jarred, always great to hear from you! Thanks for taking the time to write such a detailed reply!

> JarredWaltonGPU said: This mostly results in much faster TTFT (time to first token), at times showing over a 10X benefit. DGX Spark for example was shown taking ~40 seconds for TTFT versus ~9 seconds with aiDAPTIV; Strix Halo showed ~6 seconds with aiDAPTIV and ~36 seconds without.

Awesome stats!

> JarredWaltonGPU said: The other use case is for fine-tune training of models.

Very cool! Too bad the article made only a passing mention of training. :(

Did you try giving Anton some nice graphs to include in the article?

> JarredWaltonGPU said: For fine-tuning, aiDAPTIV SSDs are configured as SLC NAND. (It's a firmware thing, where TLC NAND stays in pure pSLC mode.) Instead of ~5,000 program/erase cycles in TLC mode, the NAND gets about 60,000 PE cycles. Combined with overprovisioning, the aiDAPTIV SSDs are rated for 100 DWPD (drive writes per day).

Ah yes, good point. It's rare to see such endurance outside of the defunct Optane drives (which topped out at PCIe 4.0), and I think the only others use Kioxia's XL-Flash.

https://www.tomshardware.com/pc-components/ssds/custom-pcie-5-0-ssd-with-3d-xl-flash-debuts-special-optane-like-flash-memory-delivers-up-to-3-5-million-random-iops

> JarredWaltonGPU said: Now, if you're wondering if that's even necessary, I wondered the same thing.

Great use case for write-intensive drives!

Lastly, thank you for doing your part to help mitigate The Great DRAM Shortage! ...Although, I guess it does sort of shift DDR5 demand over to NAND.

JarredWaltonGPU

> bit_user said: Lastly, thank you for doing your part to help mitigate The Great DRAM Shortage!...although, I guess it does sort of shift DDR5 demand over to NAND.

So, I'm not doing much other than the marketing side of aiDAPTIV... But I will say, the NAND shortages and storage shortages in general this year are going to be rough. Like, it's no longer a discussion of what price companies may charge for SSDs, but rather of allocation: are there even enough SSDs to satisfy the desires of big companies like Dell, HP, Lenovo, etc.?

And the answer is that no, there are not enough SSDs this year, based on projections. I believe Phison's CEO (or US president, one of the two) has said the expectation is that demand for all storage this year will be about 15~20 percent higher than manufacturing capacity. There's something like 2 zettabytes of demand and only 1.7 zettabytes of supply, including HDDs. Or maybe it's a different number, but basically the industry will fall about 15% short this year of meeting demand.
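The two percentages in the comment aren't contradictory; they measure the same gap against different bases. Using the approximate 2 ZB demand and 1.7 ZB supply figures quoted (which the comment itself flags as uncertain), a quick Python check:

```python
def storage_gap(demand_zb: float, supply_zb: float) -> tuple:
    """Return (how much demand exceeds supply, share of demand left unmet)."""
    gap = demand_zb - supply_zb
    return gap / supply_zb, gap / demand_zb


excess, unmet = storage_gap(2.0, 1.7)  # excess is about 0.176 (17.6%), unmet about 0.15 (15%)
```

Demand about 17.6% above capacity leaves about 15% of demand unserved, which fits both the "15~20 percent higher" and the "15% short" phrasings.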

The problem is that companies can't just spin up more manufacturing. It takes about two years for a NAND production fab to be built, which means if they started now, it would be done in 2028. Several companies didn't invest in more manufacturing capacity a few years back, due to a reduction in demand and lower prices, and that plays into it as well. And HDD companies haven't really put a lot into capex for a while, despite the WD / SanDisk split, which means there's a shortage for enterprise HDD storage as well. So... Buckle your belts, because both NAND and DRAM are going to get even more expensive in the coming months.

Also: aiDAPTIV fine-tune training 405B on two GPUs (It says Chris Ramseyer, who is my boss and a former Tom's alum, but I'm the writer of that one... And the goofball raising his fist in the air.)

thestryker

> JarredWaltonGPU said: For fine-tuning, aiDAPTIV SSDs are configured as SLC NAND. (It's a firmware thing, where TLC NAND stays in pure pSLC mode.) Instead of ~5,000 program/erase cycles in TLC mode, the NAND gets about 60,000 PE cycles. Combined with overprovisioning, the aiDAPTIV SSDs are rated for 100 DWPD (drive writes per day). Now, if you're wondering if that's even necessary, I wondered the same thing.

All I can think of is all the NAND used in those between the overprovisioning and running in SLC mode. Of course, with >200TB drives coming, nothing is going to save the NAND market. Greatly appreciate your insight and the additional details.

Pour another one out for 3D XPoint.

SuperPauly

> JarredWaltonGPU said: So, I'm not doing much other than the marketing side of aiDAPTIV... But I will say, the NAND shortages and storage shortages in general this year are going to be rough. Like, it's no longer a discussion of what price companies may charge for SSDs, but rather allocation: are there even enough SSDs to satisfy the desires of big companies like Dell, HP, Lenovo, etc?

So when you reckon us consumer peasants can get our hands on this tech?

I've been waiting for GPU prices to drop for hmmm, about 6 years now and haven't had a GPU in that time and would love to locally train, experiment and build with local models.

I hate giving money to tech giants for training stuff, something about the ability to do it locally eases my mind, and bank balance.

What tech will it be available in, do you know? Laptops, mini PCs, desktops, or is it available as a separate module like PCIe, M.2, etc.?

bit_user

> SuperPauly said: So when you reckon us consumer peasants can get our hands on this tech?
>
> I've been waiting for GPU prices to drop for hmmm, about 6 years now and haven't had a GPU in that time and would love to locally train, experiment and build with local models.

Sad to say, if current/recent GPU prices are too rich for your blood, then I'm going to hazard a guess that these SSDs will be, as well. Take a current PCIe 5.0 drive of like 4 TB or 8 TB capacity and add at least a 50% markup. That's if this stuff comes to client M.2 drives. Otherwise, you're looking at a U.2 (or other server form factor) and an even bigger markup.

Then, you'll also need a dGPU of like RTX 5080 or RTX 5090 caliber. Training is far more compute-intensive than inferencing.

Of course, that's just speculation, on my part.