Nvidia buys AI chip startup Groq's assets for $20 billion in the company's biggest deal ever — Transaction includes acquihires of key Groq employees, including CEO

GroqCloud will continue operations as is.


Nvidia, the world's largest GPU manufacturer and the linchpin of the AI data center buildout, has entered into a non-exclusive licensing agreement with AI chip rival Groq to use the company's intellectual property. The deal is valued at $20 billion and includes acquihires of key Groq employees, who will now join Nvidia. Nvidia's previous biggest deal was its roughly $7 billion purchase of Israeli chip company Mellanox in 2019, so that record has now been toppled.

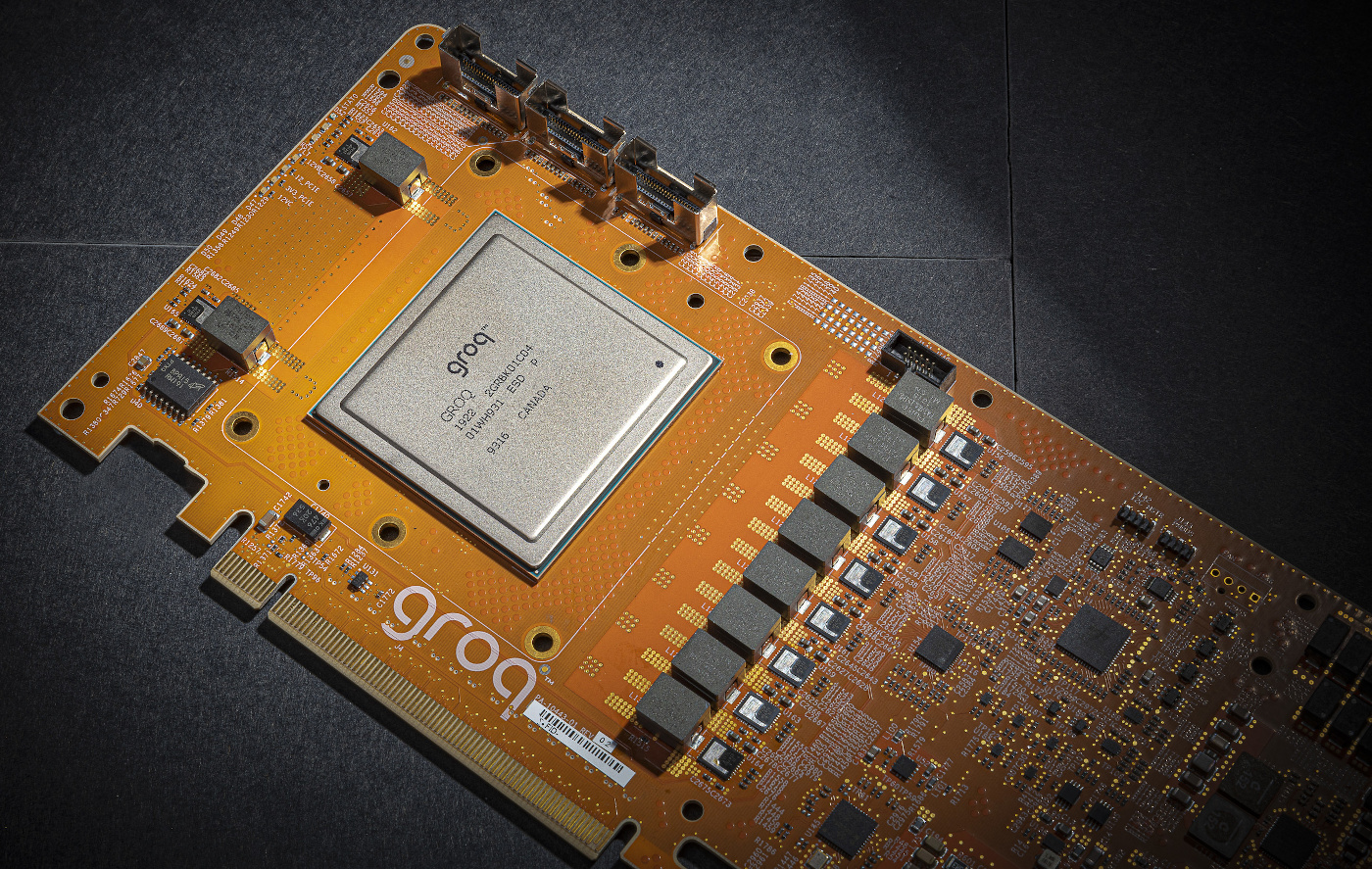

Groq is an American AI startup developing Language Processing Units (LPUs), which it positions as significantly more efficient and cost-effective than standard GPUs. Groq's LPUs are ASICs, a class of chip attracting growing interest from many firms because their custom designs are better suited to certain AI tasks, such as large-scale inference. Groq argues that it excels at inference, a market it has previously described as high-volume and low-margin.

Nvidia is the biggest beneficiary of the AI boom because it supplies most of the world's data centers and has deals with essentially every major AI player. Groq has previously accused Nvidia of predatory tactics around exclusivity, claiming that potential customers feared losing their allotment of Nvidia inventory if they were found talking to competitors such as Groq. Those concerns seem to have been put to rest with this deal.

“We plan to integrate Groq’s low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads... While we are adding talented employees to our ranks and licensing Groq’s IP, we are not acquiring Groq as a company.” — Jensen Huang, Nvidia CEO (as per CNBC).

Earlier this year, Groq built its first data center in Europe to counter Nvidia's AI dominance, shaping up as an underdog story of a startup challenging a behemoth on cost at scale. Now, Groq's LPU technology is set to be integrated into Nvidia's AI factories, as the license covers "inference technology," according to SiliconANGLE.

As part of this transaction, Groq founder and CEO Jonathan Ross and president Sunny Madra will be hired by Nvidia, along with other employees. Ross previously worked at Google, where he helped develop the Tensor Processing Unit (TPU). Simon Edwards, Groq's current finance chief, will step up as the new CEO under this refreshed structure.

Hiring away a company's key talent like this is referred to as an acquihire. Among tech firms, it's a common way to avoid antitrust scrutiny while gaining access to a company's assets and IP. Meta's AI hiring sprees also fall into this category, as does Nvidia's recent hiring of Enfabrica's CEO.


The announcement characterizes the deal as a non-exclusive agreement, meaning Groq will remain an independent company, and GroqCloud, the platform through which it rents out access to its LPUs, will continue to operate as before. Before this deal, Groq was valued at $6.9 billion as of September this year and was on pace to report $500 million in revenue for the fiscal year.


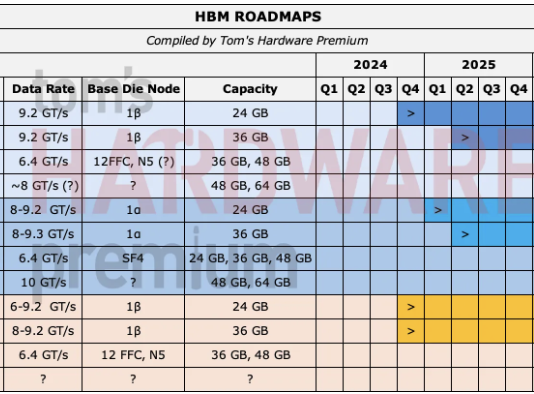

jp7189: I've never quite understood Groq's magic sauce, but they use on-board SRAM measured in MB rather than stacks of expensive HBM measured in GB, and they still knock it out of the park on LLM-specific tasks. I'm kinda sad to see Nvidia gobble them up, because they seemed to have something truly different, and I'm actually a fan of competition.

bit_user:

jp7189 said: I've never quite understood Groq's magic sauce, but they use on-board SRAM measured in MB rather than stacks of expensive HBM measured in GB, and they still knock it out of the park on LLM-specific tasks.

It's basically what all the dataflow ASICs do. It was great with convolutional neural networks, but kinda broke with transformers. I think you have to scale up to a large number of chips for it to work with LLMs, which is another aspect they focused on. Unlike Tenstorrent, they went with a more tightly coupled chip-to-chip interface.

BTW, they don't say exactly how much SRAM they've got, but they say "hundreds of MBs", which is on the order of what Nvidia now has. I think Groq might still have at least an order of magnitude more SRAM per TOPS, though.

jp7189 said: I'm kinda sad to see Nvidia gobble them up, because they seemed to have something truly different, and I'm actually a fan of competition.

Nvidia had seemed to be moving in this direction with NVDLA, but then they reversed course and excluded it from their latest SoCs.

More info on their tech is here:

https://groq.com/lpu-architecture

See also:

https://www.nextplatform.com/2022/03/01/groq-buys-maxeler-for-its-hpc-and-ai-dataflow-expertise/

bit_user:

LordVile said: More money shovelled onto the pyre

Not really, IMO. Because it's Nvidia, it's already "AI money". It would be different if it were an outside funding source.

LordVile:

bit_user said: Not really, IMO. Because it's Nvidia, it's already "AI money". It would be different if it were an outside funding source.

Depends how it's funded

bit_user:

LordVile said: Depends how it's funded

It's Nvidia, dude. Cash transaction, I'm sure. They have tons of it, and shareholders wouldn't want any share price dilution from a stock transaction.