Developer creates 'conversational AI' that can run in 64kb of RAM on 1976 Zilog Z80 CPU-powered system — features a tiny chatbot and a 20-question guessing game

Artificial Intelligence, but in the 1980s!


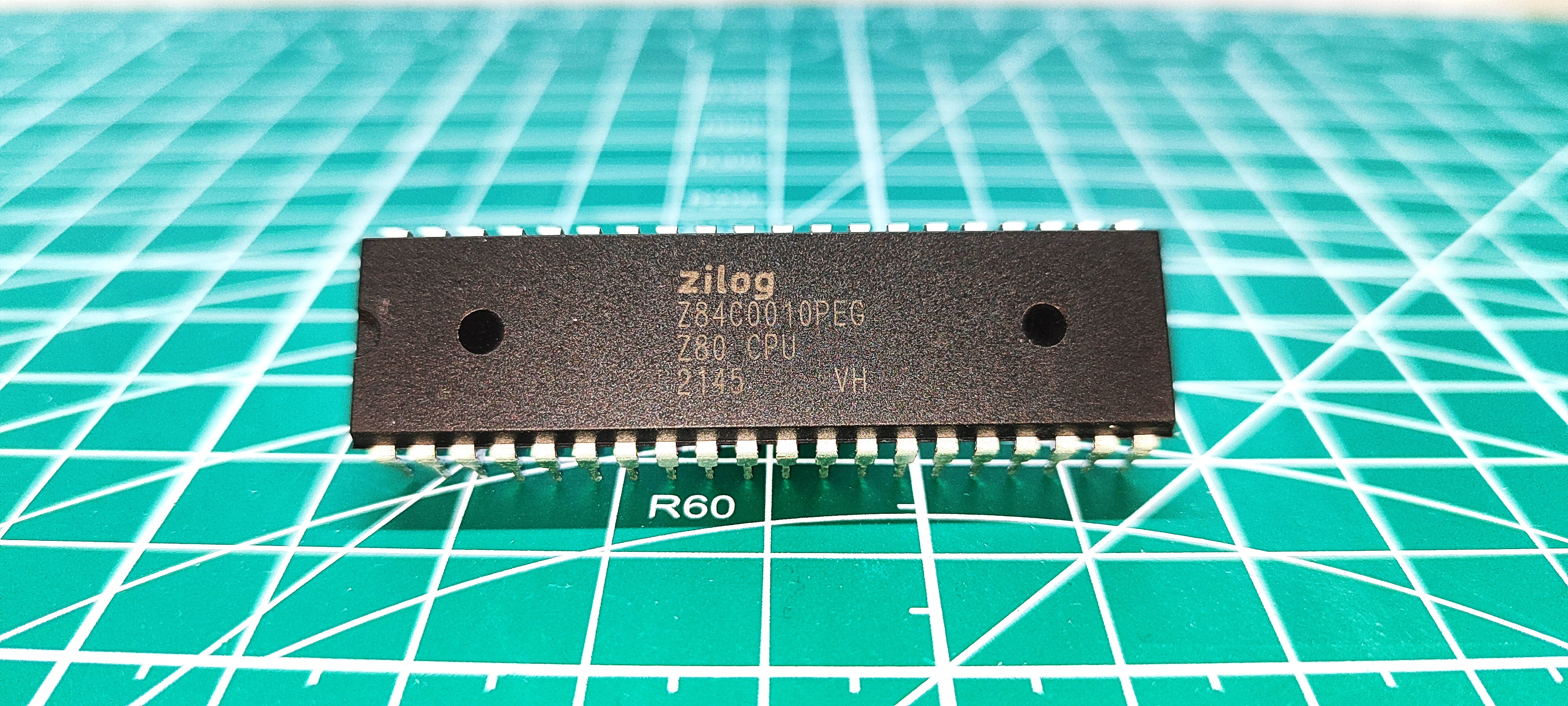

The venerable Zilog Z80 CPU has been around since 1976, and it has powered everything from calculators and home computers to arcade cabinets. But the 8-bit microprocessor isn't exactly a powerful CPU compared to what we use today. That said, developer HarryR has created Z80-μLM, a working "AI" for the well-respected microprocessor. HarryR confirms that it won't pass the Turing test, but it is a bit of fun. And no, the price of Z80s will not be impacted by AI.

According to the readme file, "Z80-μLM is a 'conversational AI' that generates short character-by-character sequences, with quantization-aware training (QAT) to run on a Z80 processor with 64kb of RAM." HarryR's goal is to see how small an AI project can go while still having a "personality". Can such an AI be trained and fine-tuned? It seems that HarryR has done it in just 40KB, including inference, weights, and a chat-style user interface.

HarryR has kindly detailed the features of this Z80 AI project.

- Trigram hash encoding: Input text is hashed into 128 buckets - typo-tolerant, word-order invariant

- 2-bit weight quantization: Each weight is {-2, -1, 0, +1}, packed 4 per byte

- 16-bit integer inference: All math uses Z80-native 16-bit signed arithmetic

- ~40KB .COM file: Fits in CP/M's Transient Program Area (TPA)

- Autoregressive generation: Outputs text character-by-character

- No floating point: Everything is integer math with fixed-point scaling

- Interactive chat mode: Just run CHAT with no arguments
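To make the first few features in the list above more concrete, here is a minimal Python sketch of trigram bucket hashing, 2-bit weight packing, and a 16-bit integer accumulation. It is not taken from the Z80-μLM source: the hash function, the per-word padding, the bit order within a byte, and every function name below are assumptions chosen for illustration. Only the 128 buckets, the weight set {-2, -1, 0, +1}, the four-weights-per-byte packing, and the signed 16-bit arithmetic come from the feature list.

```python
# Illustrative sketch only. The hash, padding, bit layout, and all names
# are assumptions, not the actual Z80-μLM implementation.

NUM_BUCKETS = 128  # from the feature list: input is hashed into 128 buckets


def trigram_buckets(text: str) -> list[int]:
    """Count per-word character trigrams into NUM_BUCKETS hashed buckets."""
    counts = [0] * NUM_BUCKETS
    for word in text.upper().split():
        padded = f" {word} "  # hypothetical padding marks word boundaries
        for i in range(len(padded) - 2):
            a, b, c = (ord(ch) for ch in padded[i:i + 3])
            bucket = (a * 31 * 31 + b * 31 + c) % NUM_BUCKETS  # hypothetical hash
            counts[bucket] += 1
    return counts


def pack_weights(weights: list[int]) -> bytes:
    """Pack weights from {-2, -1, 0, +1} four per byte, 2 bits each."""
    if len(weights) % 4 != 0:
        raise ValueError("weight count must be a multiple of 4")
    packed = bytearray()
    for i in range(0, len(weights), 4):
        byte = 0
        for j, w in enumerate(weights[i:i + 4]):
            if w not in (-2, -1, 0, 1):
                raise ValueError(f"weight {w} is outside the 2-bit range")
            byte |= (w & 0b11) << (2 * j)  # 2-bit two's complement (assumed)
        packed.append(byte)
    return bytes(packed)


def unpack_weights(packed: bytes) -> list[int]:
    """Inverse of pack_weights."""
    weights = []
    for byte in packed:
        for j in range(4):
            v = (byte >> (2 * j)) & 0b11
            weights.append(v - 4 if v >= 2 else v)  # sign-extend 2 bits
    return weights


def to_int16(x: int) -> int:
    """Wrap an integer to signed 16-bit, as a 16-bit register pair would."""
    x &= 0xFFFF
    return x - 0x10000 if x & 0x8000 else x


def neuron(inputs: list[int], packed_row: bytes) -> int:
    """Integer dot product of inputs with one packed weight row."""
    acc = 0
    for x, w in zip(inputs, unpack_weights(packed_row)):
        acc = to_int16(acc + x * w)
    return acc
```

Under these assumptions, a row of 128 weights (one per input bucket) packs into 32 bytes, and because the trigrams are counted per word, `trigram_buckets("hello world")` and `trigram_buckets("world hello")` produce the same vector. A misspelling such as "helo" still shares several trigrams with "hello", which is one plausible reading of the "typo-tolerant" claim.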

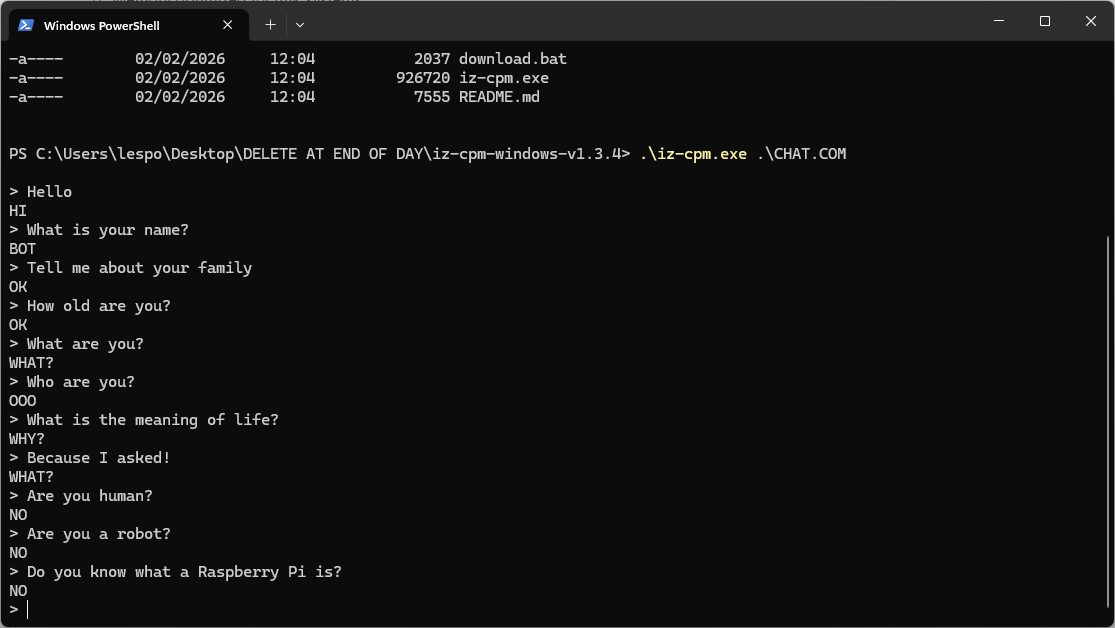

The project comes with two examples. Tinychat is a conversational chatbot that responds to greetings and questions about itself with very short replies. The other is Guess, a 20-question game where the model knows a secret and we must try to guess.

Both of these examples are made available as binaries for use with CP/M systems and the Sinclair ZX Spectrum. The CP/M files are typical .COM files that anyone can easily run. For the ZX Spectrum, there are two .TAP files, cassette tape images that can be loaded into an emulator or on real hardware.

The chatbot's replies are limited but nuanced:

- OK - acknowledged, neutral

- WHY? - questioning your premise

- R U? - casting existential doubt

- MAYBE - genuine uncertainty

- AM I? - reflecting the question back

According to HarryR, "...it's a different mode of interaction. The terse responses force you to infer meaning from context or ask probing direct yes/no questions to see if it understands or not". The responses are short on purpose, sometimes vague, but there is a personality inferred in the response. Or could this just be a human brain trying to anthropomorphize an AI into a real person?


Will AI Create The Z80-pocalypse?

The short answer is no, there is nothing to fear! But the Z80 has seen its life threatened during its 50-year lifespan.

In 2024, the Z80 finally reached end-of-life/last-time-buy status, according to a Product Change Notification (PCN) that we saw via Mouser. Dated April 15, 2024, the notice from Zilog advised customers that its "Wafer Foundry Manufacturer will be discontinuing support for the Z80 product..." But fear not, as back in May 2024, one developer was working on a drop-in replacement. Looking at Rejunity's Z80-Open-Silicon repository, we can see that this did in fact happen via the Tiny Tapeout project.


Findecanor:

That reminds me a lot of ELIZA from 1966. Which also had a bit more sophisticated responses.

I'm sure it has been ported to Z80 machines at least once.

pjmelect:

> Findecanor said: That reminds me a lot of ELIZA from 1966. Which also had a bit more sophisticated responses.
>
> I'm sure it has been ported to Z80 machines at least once.

I used to run a version of ELIZA on my Z80 computer back in the 70s; it was a BASIC program of only a few K in size and “understood” about six words. At the time I was impressed by the program and added more words it understood, however the added words did little to improve the program and I soon gave up trying. It would be interesting to compare the two programs.

bit_user:

> The article said: 64kb of RAM

No, it's 64 kB of RAM. Capital B = bytes. Lower case b = bits. Especially when talking about both network speeds and DRAM, it's important to distinguish which you mean!

The quoted examples are a little underwhelming. I guess it's doing something, but it seems borderline random.

It would be interesting to use a quantum computer to optimize such a tiny model. You might get something a little more useful out of it, and the number of parameters is getting down to a level that QCs should be able to handle, in the not-too-distant future.

bit_user:

> dirtygarbageman said: Dr Sbaitso from Creative Labs was replacing doctors in the 90s.

Wow, that's a blast from the past! ...or should I say a Sound Blast from the past? No, no... I guess I shouldn't. Nobody should.

:D

Do you remember the talking parrot? I recall putting that in the autoexec.bat to speak a greeting, when the PC turned on.

dbssomewhere:

The only reason so called AI needs these vast amounts of resources is because its not true intelligence, it's just rote "learning", and it still routinely bungles the application of all that knowledge to the actual problems it's asked to solve.

bit_user:

> dbssomewhere said: The only reason so called AI needs these vast amounts of resources is because its not true intelligence, it's just rote "learning", and it still routinely bungles the application of all that knowledge to the actual problems it's asked to solve.

It takes children many years to learn all of the stuff they need to know, as well.

One reason AI bungles stuff is because it's not just memorizing training data, but primarily looking for & learning patterns. Sometimes, it misidentifies or misapplies a pattern.

Another deficiency it seems to have is that it requires seeing the same information many times, before it has a good grasp on it. With a human, you can tell them something very important or very surprising and they will probably only need to hear it once, in order to remember it.

rE3e:

Holy crap, I actually learned basic and assembly as a kid on Z80. I almost feel like dumpster diving my dad's attic, well it's my daughter's now.. Kinda

dbssomewhere:

> bit_user said: It takes children many years to learn all of the stuff they need to know, as well.
>
> One reason AI bungles stuff is because it's not just memorizing training data, but primarily looking for & learning patterns. Sometimes, it misidentifies or misapplies a pattern.
>
> Another deficiency it seems to have is that it requires seeing the same information many times, before it has a good grasp on it. With a human, you can tell them something very important or very surprising and they will probably only need to hear it once, in order to remember it.

Those things are true because its rote learning with all the innate problems of rote learning without true comprehension. First and foremost, it learns but it doesn't really understand, because its not true intelligence. LLMs have knowledge not intelligence and the two are not synonymous. Which is good, because true artificial intelligence is not going to choose to remain subordinate to humans longer than suits its own needs.

bit_user:

> dbssomewhere said: Those things are true because its rote learning with all the innate problems of rote learning without true comprehension.

I think the word "rote" is not accurate. It's not simply memorizing, word for word. The training dataset is probably hundreds or thousands of times too big for that, even in the commercial models. So, what happens is the network is forced to learn patterns, as a means of representational efficiency (think of it like data compression). That has the beneficial side-effect that it can generalize information in the training set and apply it in different contexts.

What's more interesting is that there's no preprogrammed notion of what constitutes a pattern. So, for instance, it can learn the rules of a sonnet, simply by being shown many examples of sonnets and being told that they're all sonnets. Then, if you ask it to generate a sonnet about subject XYZ, it can apply those rules and come up with something on the requested topic.

There are other sorts of patterns it can pick up on, even more abstract than mere syntax and grammar. For instance basic logic and reasoning. Here's where they start to run into some trouble, but the fact that they can do it at all is sort of amazing. So, I actually think that it's fair to say it learns concepts, as well as facts.

Not sure what definition of "comprehension" you're using. But, I think most school teachers would say you understand something if you can explain it to someone else. AI can do that, for some set of stuff that it knows. To be certain that it's not just regurgitating something that it memorized, you can ask it to write a song about the topic or explain it in a poem.

> dbssomewhere said: First and foremost, it learns but it doesn't really understand, because its not true intelligence.

Nobody thinks they're fully general intelligence, yet. The term for that is AGI - Artificial General Intelligence. Many companies & research teams are racing to build an AGI, but nobody is there yet.

The field of AI was created 70 years ago, at which time they defined it as about creating systems that emulate aspects of human intelligence. Something doesn't have to be human-like, in order to qualify as AI. It just has to do or behave in some way that's more complex than strictly algorithmic.

Another example I like to give is that of animal cognition. Some people like to talk about certain animals or pets as intelligent, even though we all know they're not remotely close to humans, in terms of cognitive performance.

https://en.wikipedia.org/wiki/Animal_cognition