3DTested Verdict

Corsair’s AI Workstation 300 is a classy, compact, and flexible Ryzen AI Max+ 395 system that boasts plenty of performance for gaming and AI alike. But recent price hikes, software stability issues, and fresh competition in the local AI space all make it tough to recommend for AI work specifically.

Pros

- Powerful x86 CPU and Radeon 8060S GPU maximize OS and game compatibility
- Sleek, well-ventilated chassis
- Versatile storage options
- Two-year warranty and Corsair support

Cons

- AI performance trails the competition for both LLMs and content creation
- Could be a bit quieter under load
- Current prices make for a tough sell given the performance on offer


AMD's Ryzen AI Max+ 395, popularly known as Strix Halo, has earned a reputation as the scrappy AI enthusiast platform of choice in the year since its introduction, thanks to its powerful integrated Radeon 8060S graphics and support for RAM pools of up to 128GB.

Corsair's AI Workstation 300 brings Strix Halo to the company’s lineup for the first time. With configuration options ranging up to the flagship Ryzen AI Max+ 395 with 128GB of RAM and two 2TB NVMe SSDs in an aluminum shell that takes up just XX liters, this system is a compact and classy implementation of AMD’s flagship APU.

And thanks to its 16-core, 32-thread Zen 5 x86 CPU, the AI Workstation 300 can offer native gaming support under both Linux and Windows that competitors like Nvidia’s DGX Spark and Apple’s various Macs lack.

Corsair provides plenty of connectivity options for general PC usage, unlike the more focused DGX Spark. The front panel boasts an SD card reader, a USB 4 Type-C port, two USB 3.2 Gen 2 Type-A ports, and a combo audio jack.

The rear panel has a DisplayPort 1.4 connector, an HDMI 2.1 output, another USB 4.0 Type-C port with DisplayPort Alt Mode support, a USB 3.2 Gen 2 Type-A port, two USB 2.0 Type A ports, a 2.5 Gigabit Ethernet jack, and another audio combo jack.

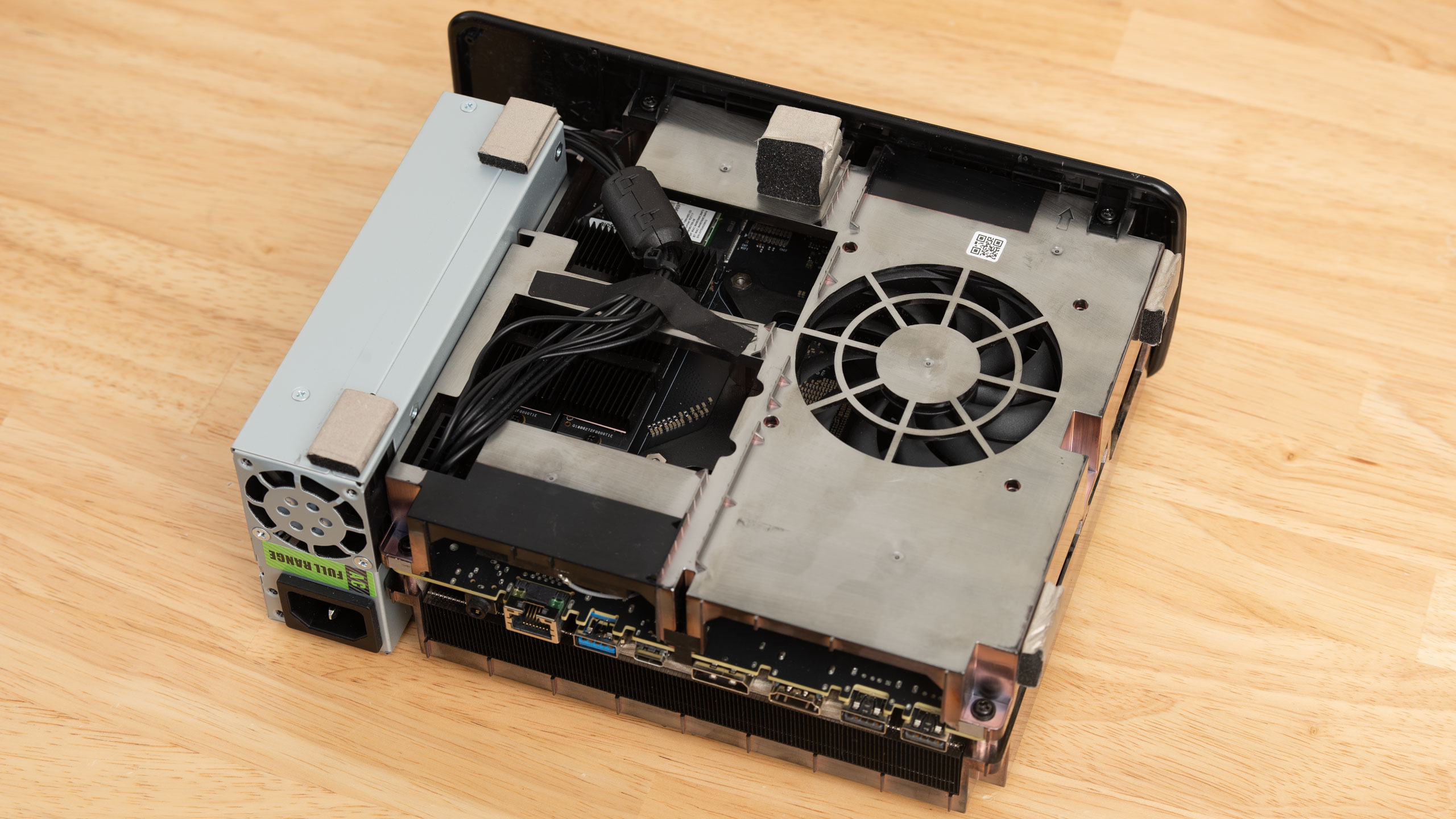

Inside, expansion options are limited due to the highly integrated nature of Strix Halo.

It’s easy enough to remove all of the screws at the back of the unit and slide the integrated motherboard and cooling assembly out of the front of the chassis, but the only potentially user-accessible expansion options are a pair of M.2 2280 slots, both of which are populated with 2TB NVMe SSDs in our review system’s configuration.


The AI Workstation 300 comes with Windows 11 out of the box, but its handy dual-SSD config makes it easy to install and dual-boot Linux if Windows isn't your preferred AI development environment.

Our recent AI testing relies in part on llama.cpp and ComfyUI, both of which are better documented and better characterized under Linux on AMD platforms. I installed Ubuntu 24.04 LTS on the spare 2TB drive of my AI Workstation 300, and installing AMD's drivers and the ROCm stack afterward was straightforward enough.

Corsair AI Workstation 300 Specs

| Component | Specification |
| --- | --- |
| CPU | AMD Ryzen AI Max+ 395 (16 cores, 32 threads, up to 5.1 GHz) |
| GPU | Radeon 8060S, integrated, 2560 shader cores, up to 96GB dedicated RAM |
| RAM | 128GB LPDDR5X-8000 |
| Expansion/connectivity | Front: 2x USB 3.2 Gen 2 Type-A, 1x USB 4.0 Type-C, Headphone/Mic Combo Jack, SD Card 4.0; Rear: 2x USB 2.0 Type-A, 1x USB 3.2 Gen 2 Type-A, 1x USB 4.0 Type-C, Headphone/Mic Combo Jack, 1x DisplayPort, 1x HDMI, 1x 2.5 GbE |
| Storage | 2x 2TB NVMe SSD |
| Power supply | 300W integrated |

A quick look at AI and general desktop performance

Since Corsair positions the AI Workstation 300 as, well, an AI workstation, we put its AI performance to the test with the llama.cpp model runner for LLMs and ComfyUI for creative workflows under Ubuntu 24.04 LTS, using ROCm 7.1.1 as our compute backend.

We used Nvidia's DGX Spark and its GB10 chip as our primary point of comparison, since its 128GB of RAM, similar memory bandwidth, and compact form factor make it a natural competitor for Strix Halo generally and the AI Workstation 300 specifically.

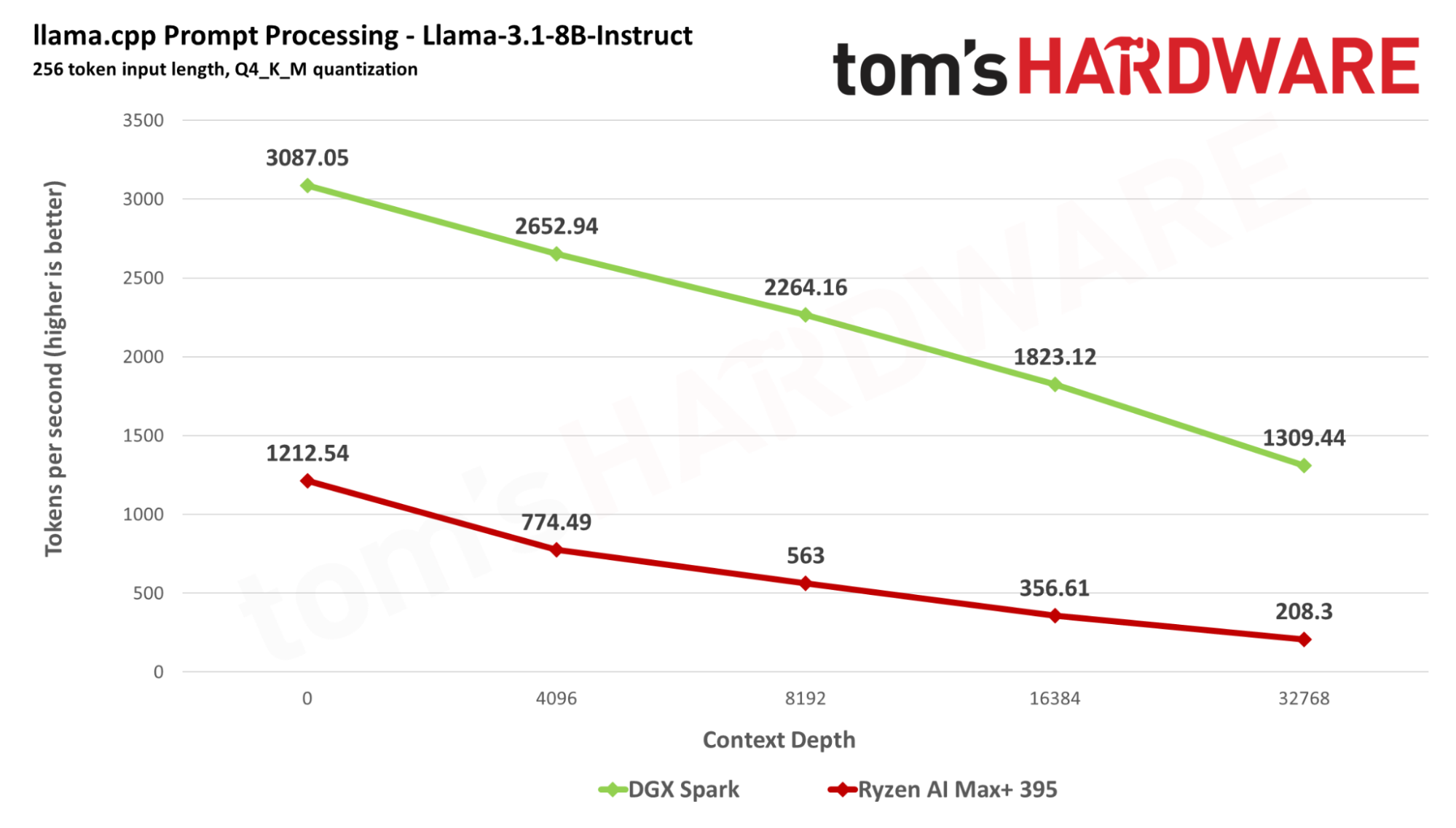

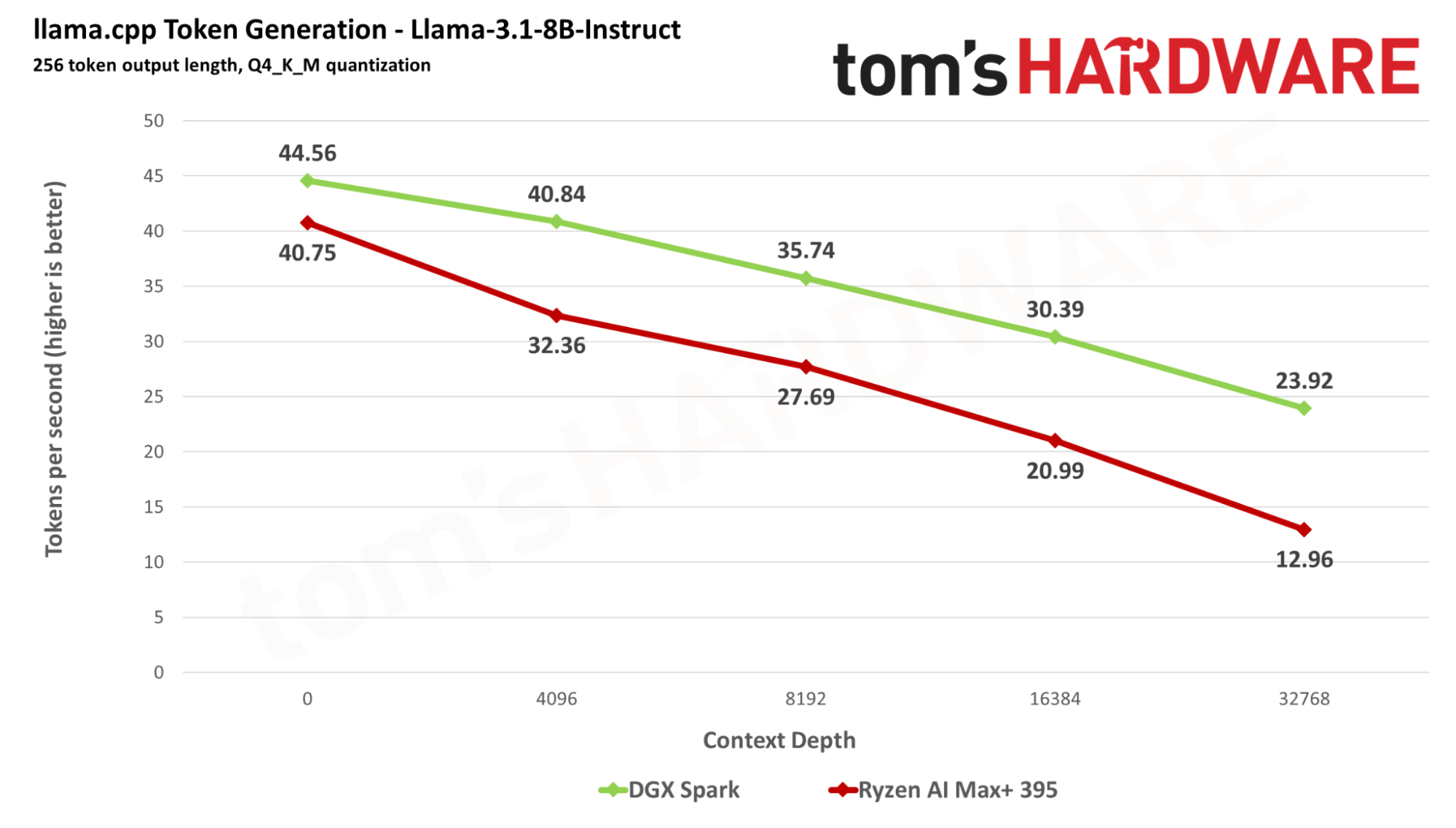

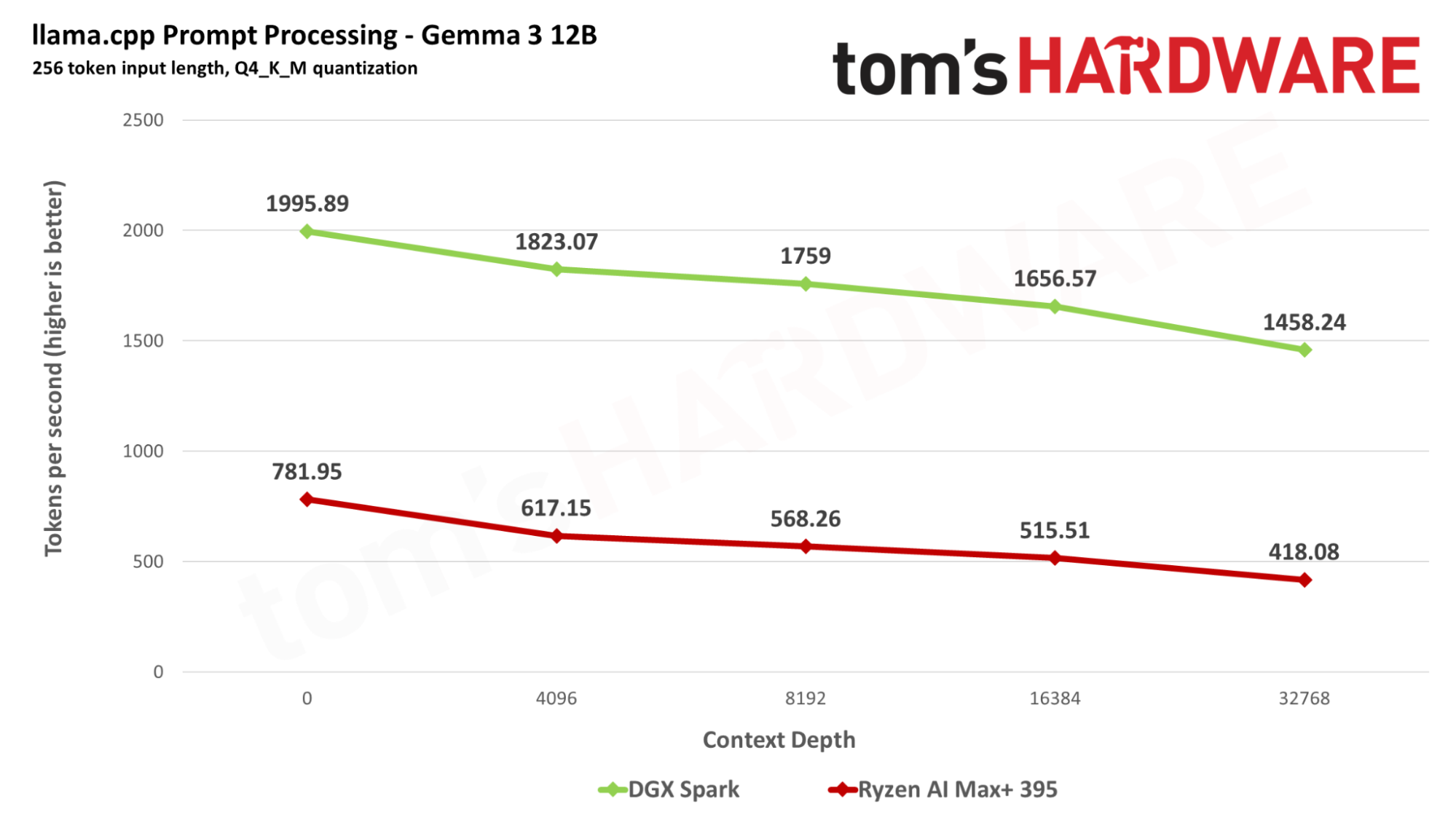

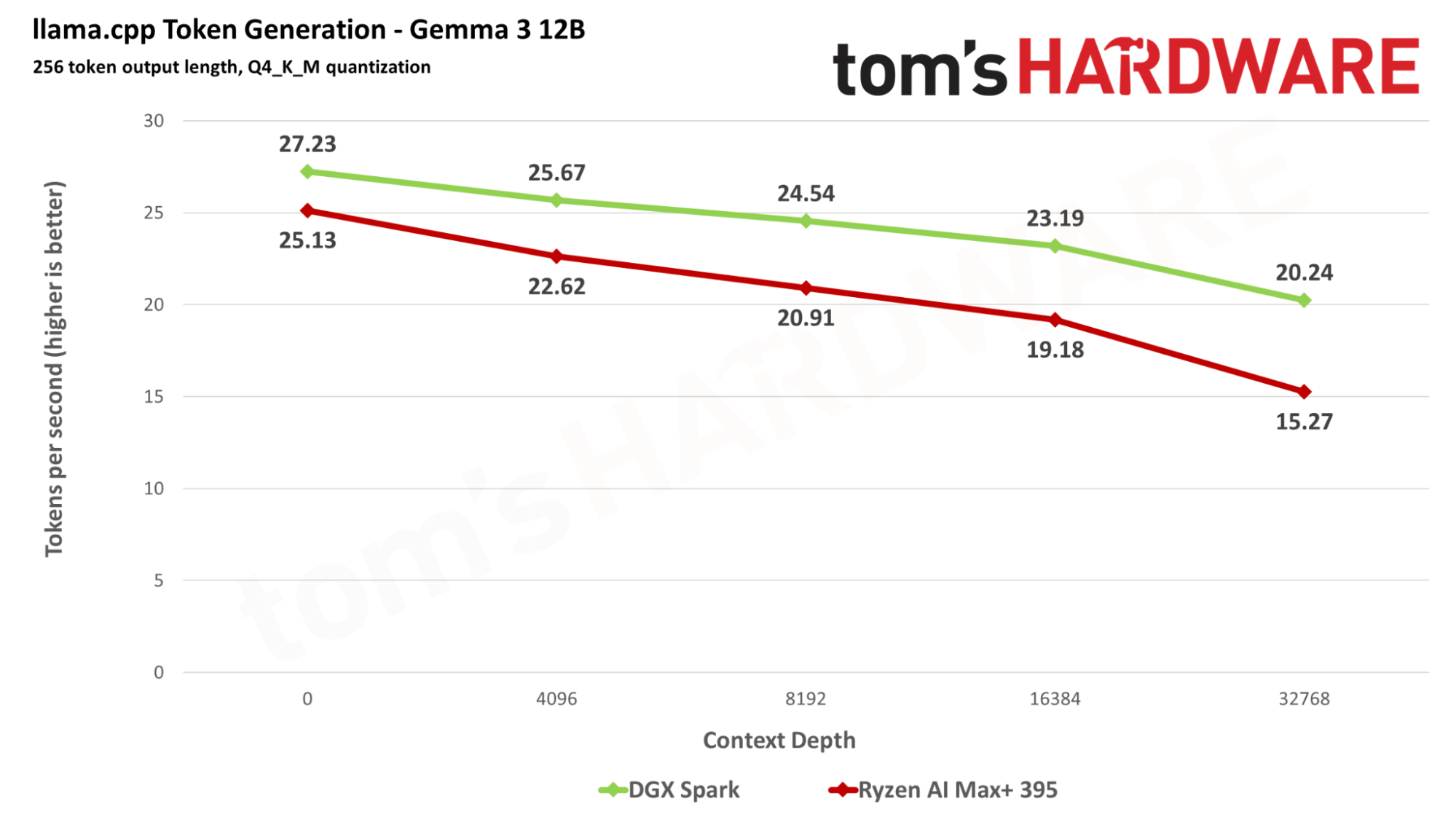

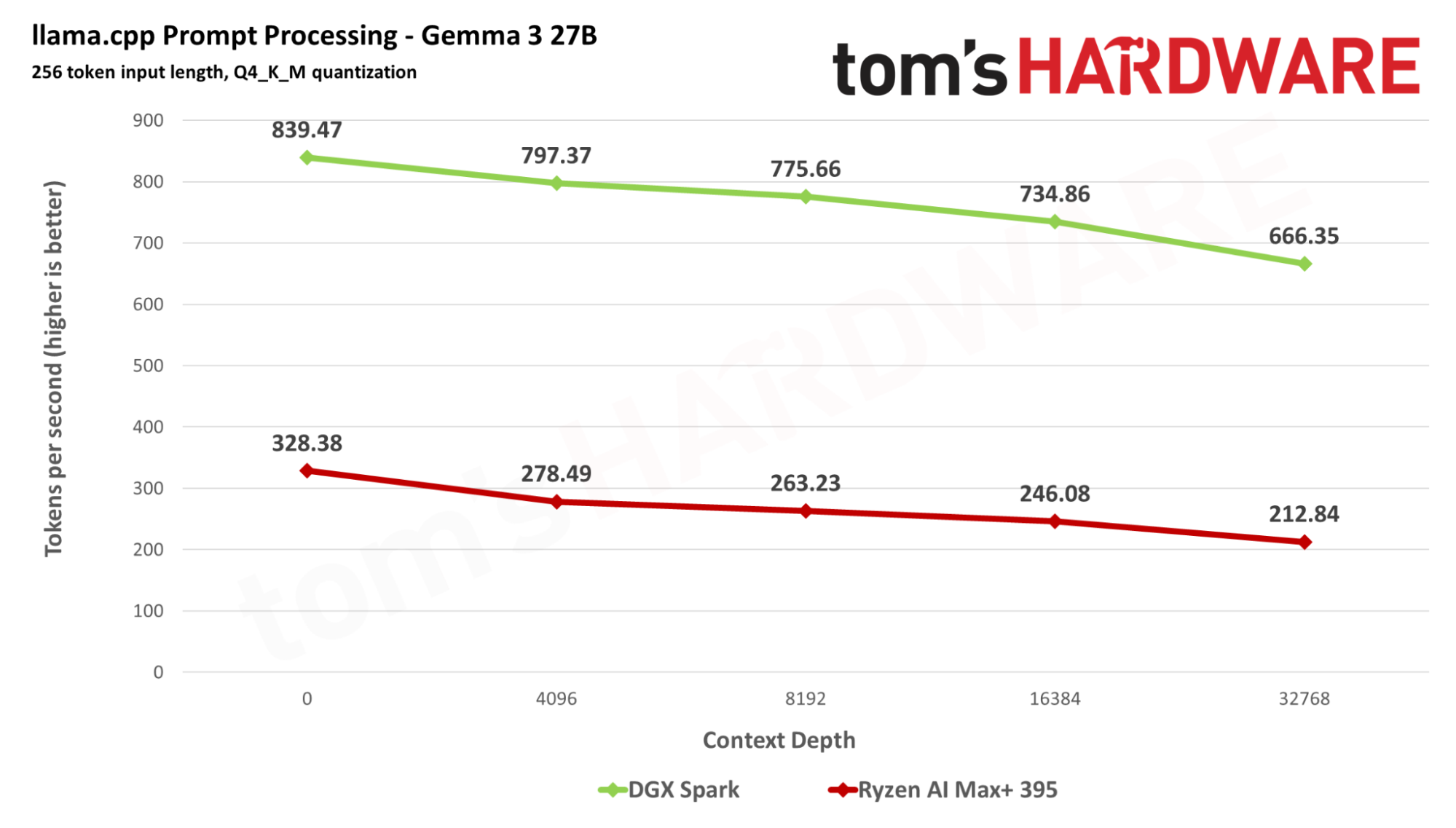

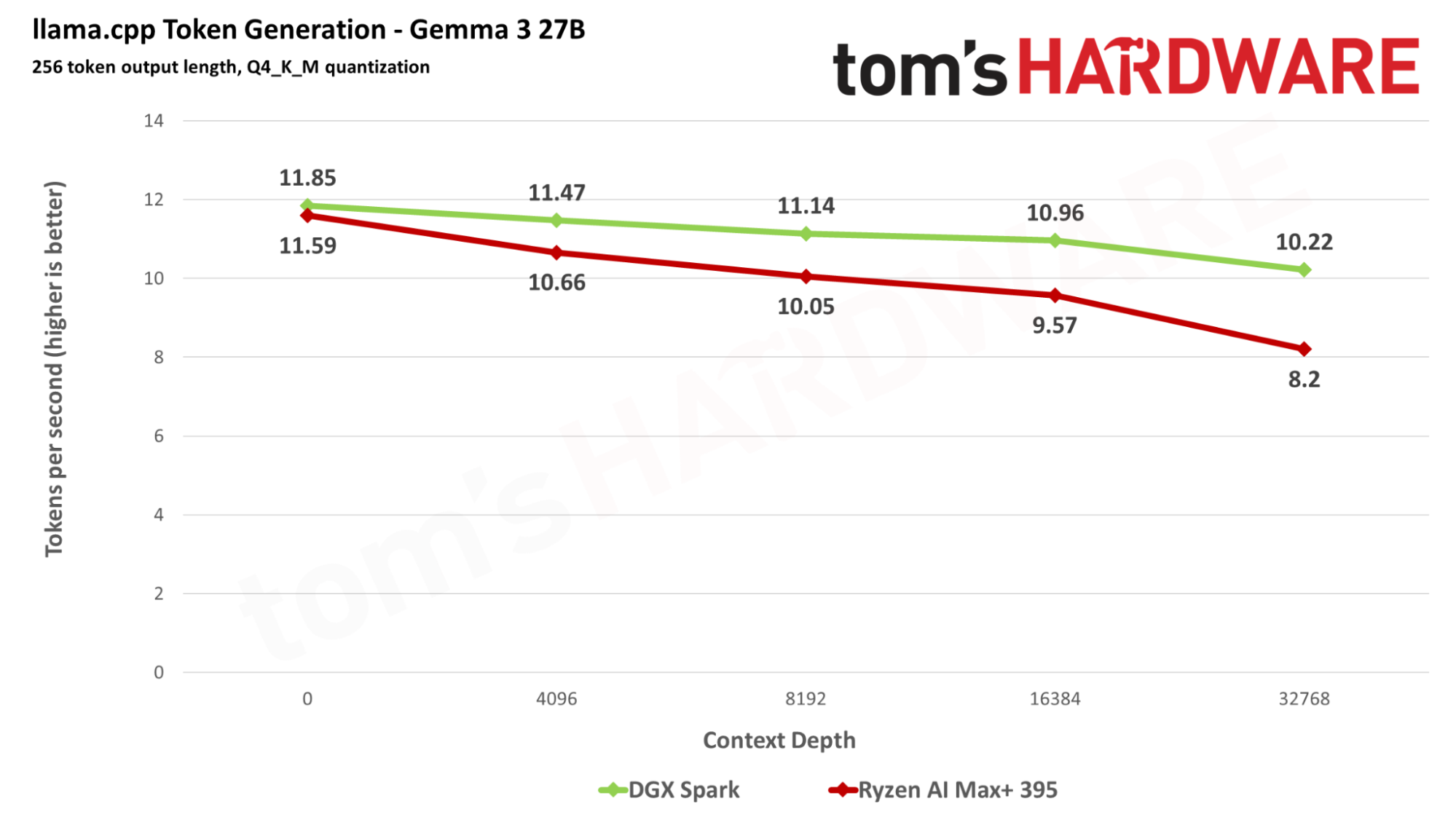

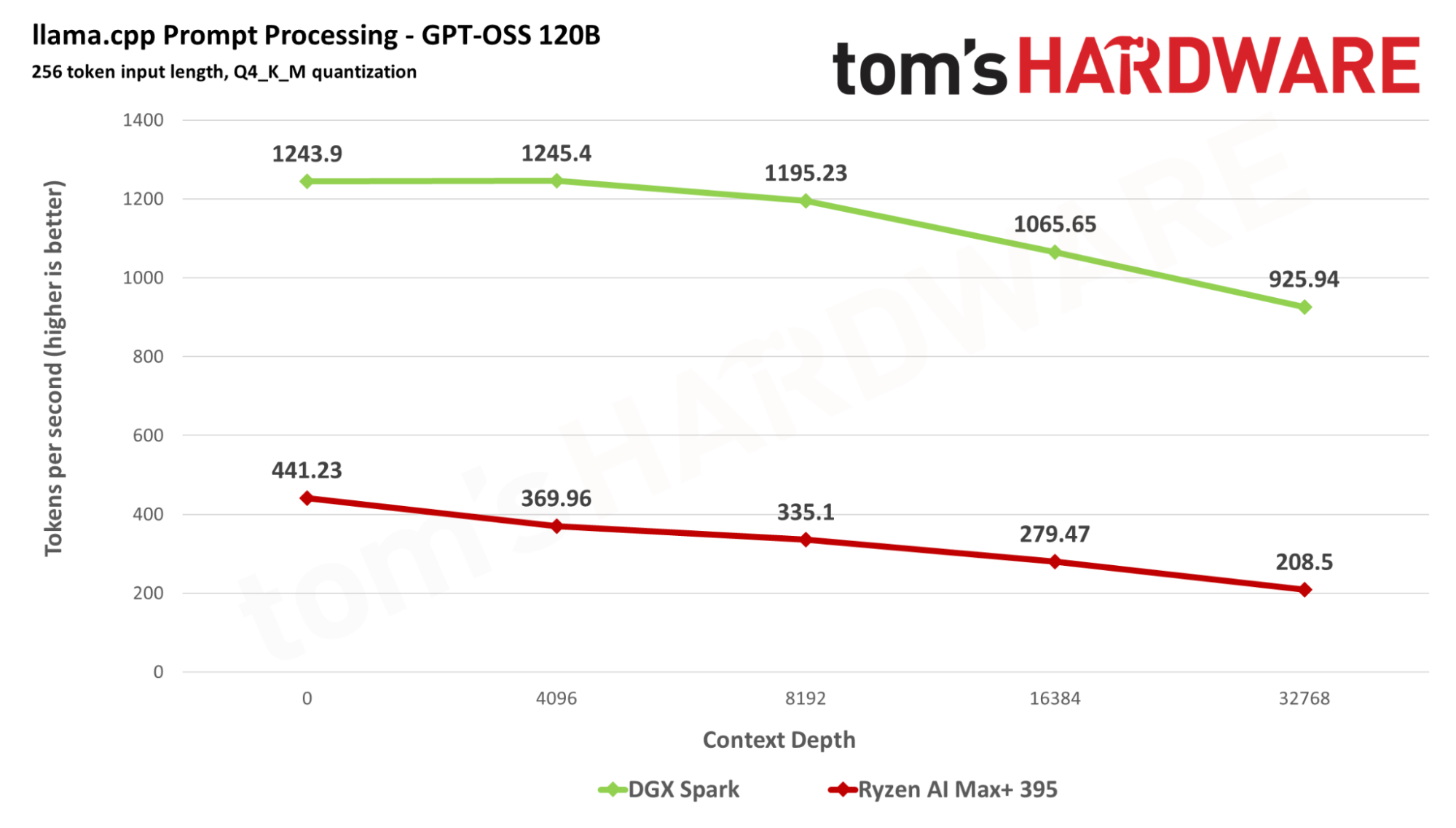

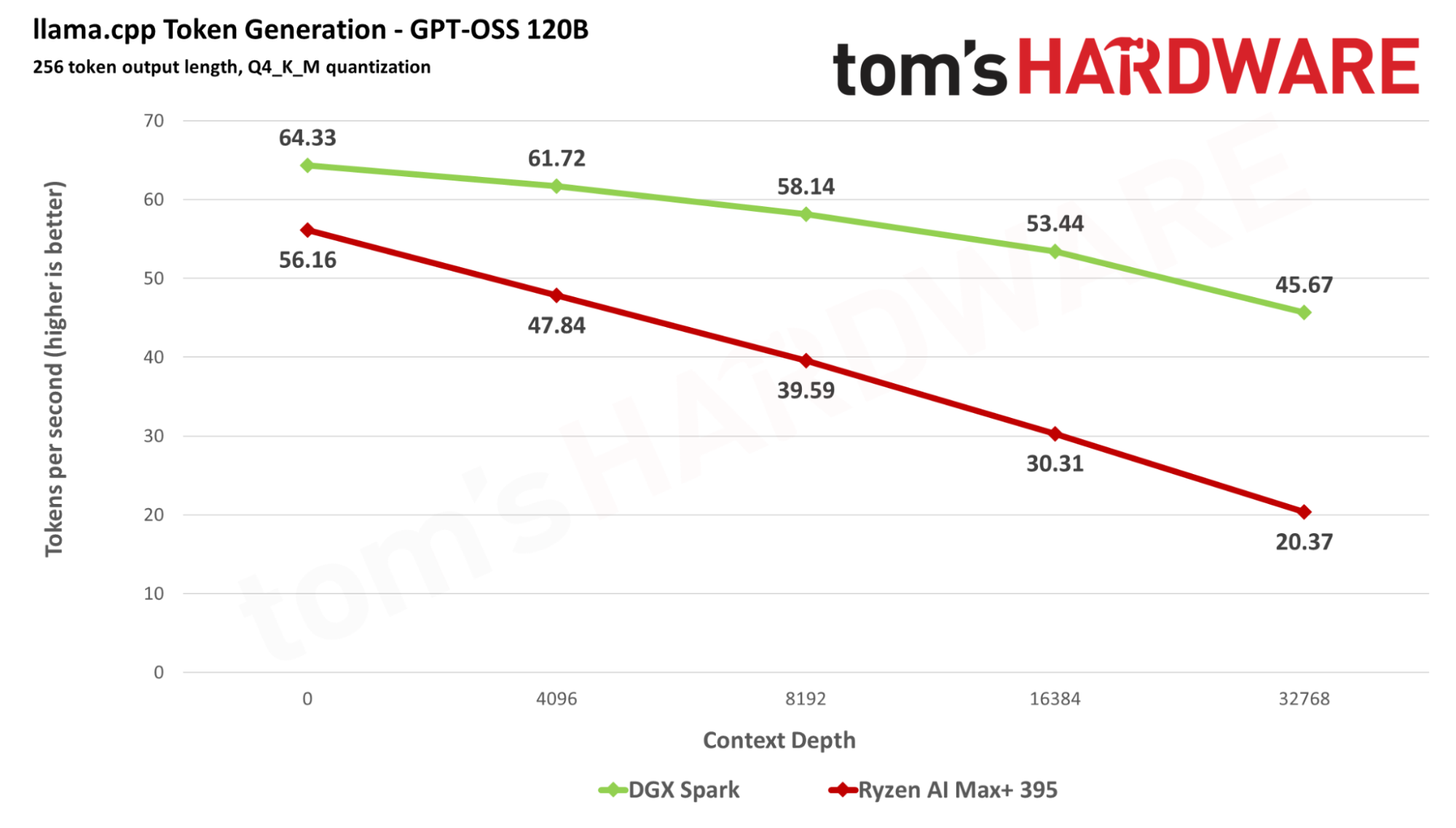

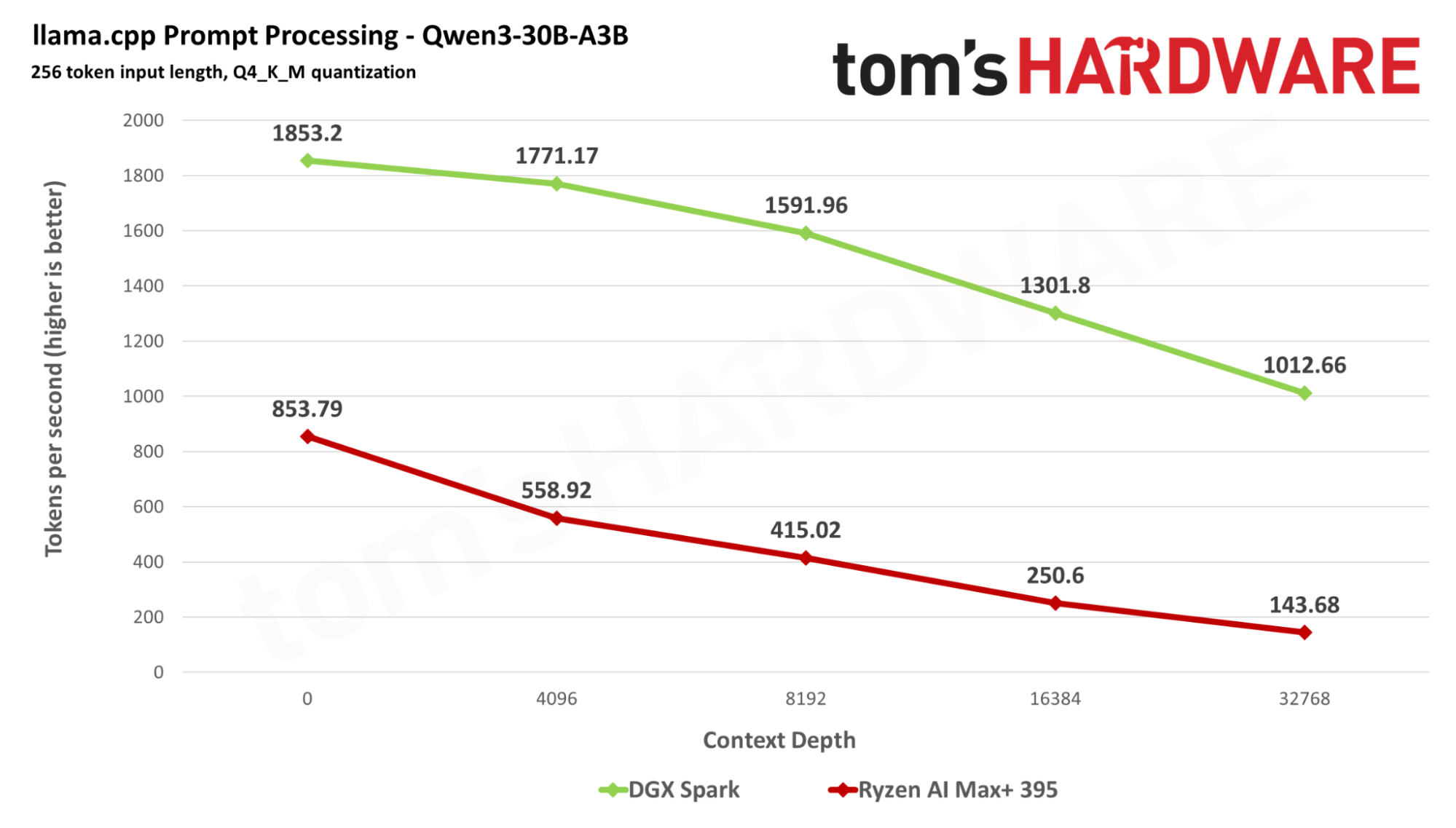

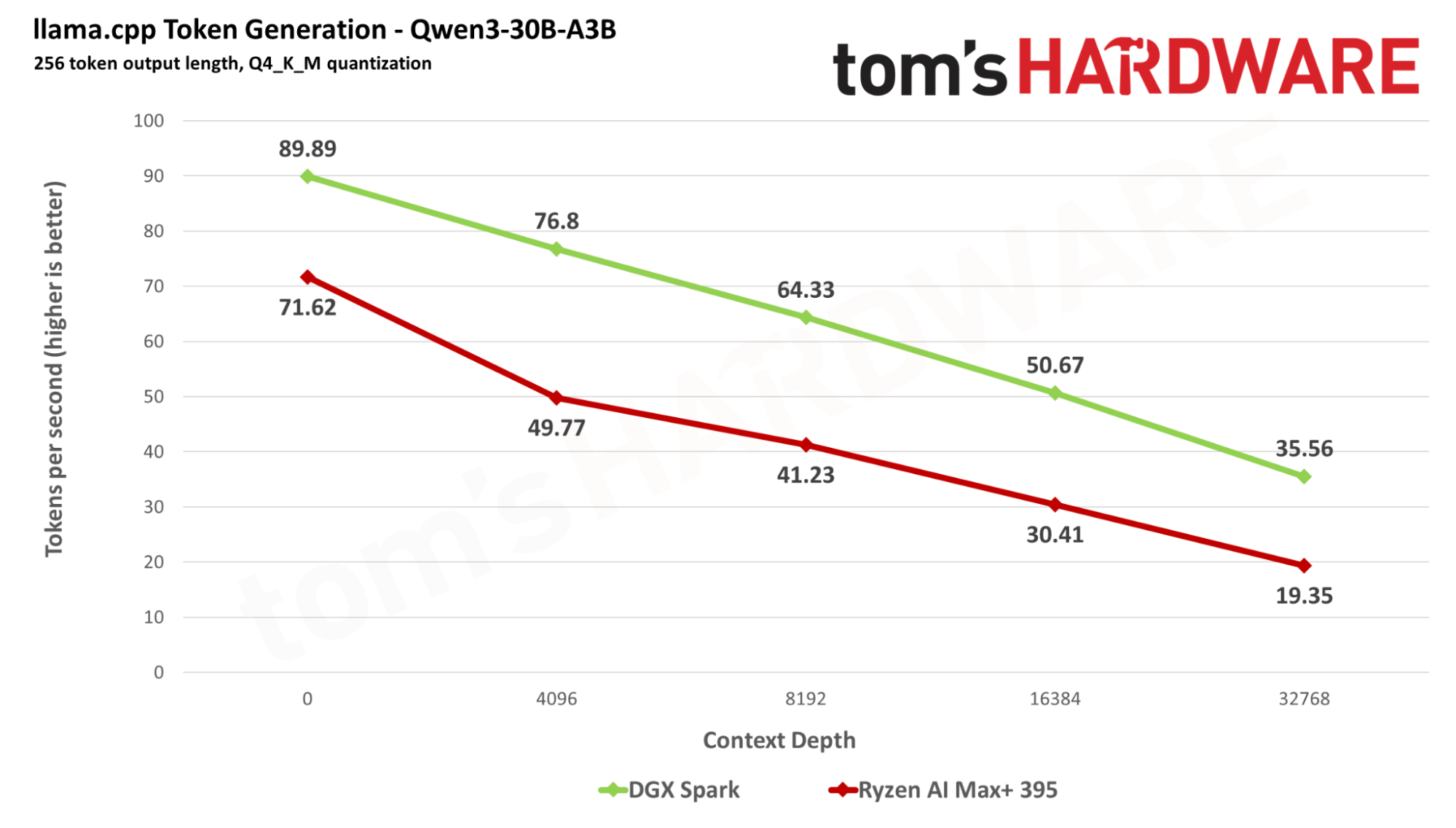

We benchmark two phases of LLM interaction: prompt processing (or prefill) for input, and token generation for output. We offer 256 input tokens to each model and generate 256 output tokens across a range of context lengths to illustrate what might happen as one's interactions with a model grow over time.
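For readers who want to try a similar sweep, here is a minimal sketch of how such a run could be scripted. It assumes llama.cpp's bundled `llama-bench` tool is the harness, which the review does not state; the model path and the context depths are hypothetical placeholders, not the values we tested.

```python
import shlex


def llama_bench_command(model_path, depths, n_prompt=256, n_gen=256):
    """Build a llama-bench argument list for a prefill/generation sweep.

    -p sets the prompt (prefill) length, -n the number of generated tokens,
    and -d the context depth(s) already filled before each test starts.
    """
    return [
        "llama-bench",
        "-m", model_path,
        "-p", str(n_prompt),
        "-n", str(n_gen),
        "-d", ",".join(str(d) for d in depths),
        "-o", "json",
    ]


# Hypothetical model file and depth sweep, for illustration only.
cmd = llama_bench_command("models/example.gguf", [0, 4096, 16384])
print(shlex.join(cmd))
```

The script only prints the command rather than running it, so it can be inspected or pasted into a shell on a machine with llama.cpp built for the relevant backend.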

We’ll start off our benchmarks using Meta’s llama-3.1-8B, a dense LLM (i.e., one for which all parameters are activated per token) whose behavior is well-understood in the current AI landscape.

We extend our dense model testing with Google’s Gemma 3 12B and 27B.

We’ll also look at performance with OpenAI’s gpt-oss 120b and Qwen3-30B-A3B models, which use mixture-of-experts (MoE) architectures that are closer to the state of the art in LLM research.

MoE models can have massive parameter counts, but only a portion of those parameters are activated for any given token. That allows MoEs to balance both capability and performance, although they can still require large amounts of memory. gpt-oss 120b, for example, requires about 60 GB of RAM.
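A rough back-of-the-envelope estimate shows where a figure of that size comes from. The sketch below assumes roughly 117 billion total parameters stored at about 4.25 bits each; both numbers are our assumptions for illustration, not measurements from this review, and real checkpoints mix precisions and need extra room for the KV cache and runtime buffers.

```python
def weight_footprint_gb(total_params, bits_per_weight):
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    return total_params * bits_per_weight / 8 / 1e9


# Assumed figures, not from the review: ~117 billion total parameters
# stored at roughly 4.25 bits each (4-bit values plus shared scale factors).
total_gb = weight_footprint_gb(117e9, 4.25)
print(f"approximate weight footprint: {total_gb:.0f} GB")
```

Every expert has to stay resident in memory even though only a few are active for any given token, which is why the footprint tracks the total parameter count rather than the active one.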

Across our LLMs, the AI Workstation 300 is behind in the compute-heavy prompt processing phase of our benchmarks due to the lower raw compute horsepower of the Radeon 8060S compared to GB10.

In the memory-bandwidth-dependent token generation phase of the workload, the AI Workstation 300 can put up a good fight when context lengths are short, but as they grow, Nvidia's GB10 maintains higher and more consistent performance.
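To see why token generation leans on memory bandwidth, consider a simple ceiling: each generated token requires reading roughly all active weights, plus the KV cache, from memory once. The sketch below assumes a 256-bit memory bus for Strix Halo (our assumption; the spec table lists only LPDDR5X-8000) and a hypothetical 8 GB of weights, so the results are theoretical upper bounds rather than predictions of our benchmark numbers.

```python
def peak_bandwidth_gbs(transfers_mt_s, bus_width_bits):
    """Theoretical peak memory bandwidth in GB/s."""
    return transfers_mt_s * 1e6 * bus_width_bits / 8 / 1e9


def decode_ceiling_tok_s(bandwidth_gbs, weights_gb, kv_cache_gb=0.0):
    """Upper bound on tokens/s if every token reads weights plus KV cache once."""
    return bandwidth_gbs / (weights_gb + kv_cache_gb)


# LPDDR5X-8000 comes from the spec table; the 256-bit bus width is assumed.
bw = peak_bandwidth_gbs(8000, 256)

# Hypothetical dense model with 8 GB of weights, at short and long context.
short_ctx = decode_ceiling_tok_s(bw, weights_gb=8.0)
long_ctx = decode_ceiling_tok_s(bw, weights_gb=8.0, kv_cache_gb=2.0)
print(f"{bw:.0f} GB/s peak, {short_ctx:.1f} tok/s vs {long_ctx:.1f} tok/s ceiling")
```

The second case illustrates one reason throughput falls as context grows: a larger KV cache adds to the bytes read per token, and attention compute grows alongside it.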

LLMs are just one usage for AI compute, of course. Image generation is another popular task, and even local video generation is now a possibility thanks to relatively compact text-to-video models like LTX-2.

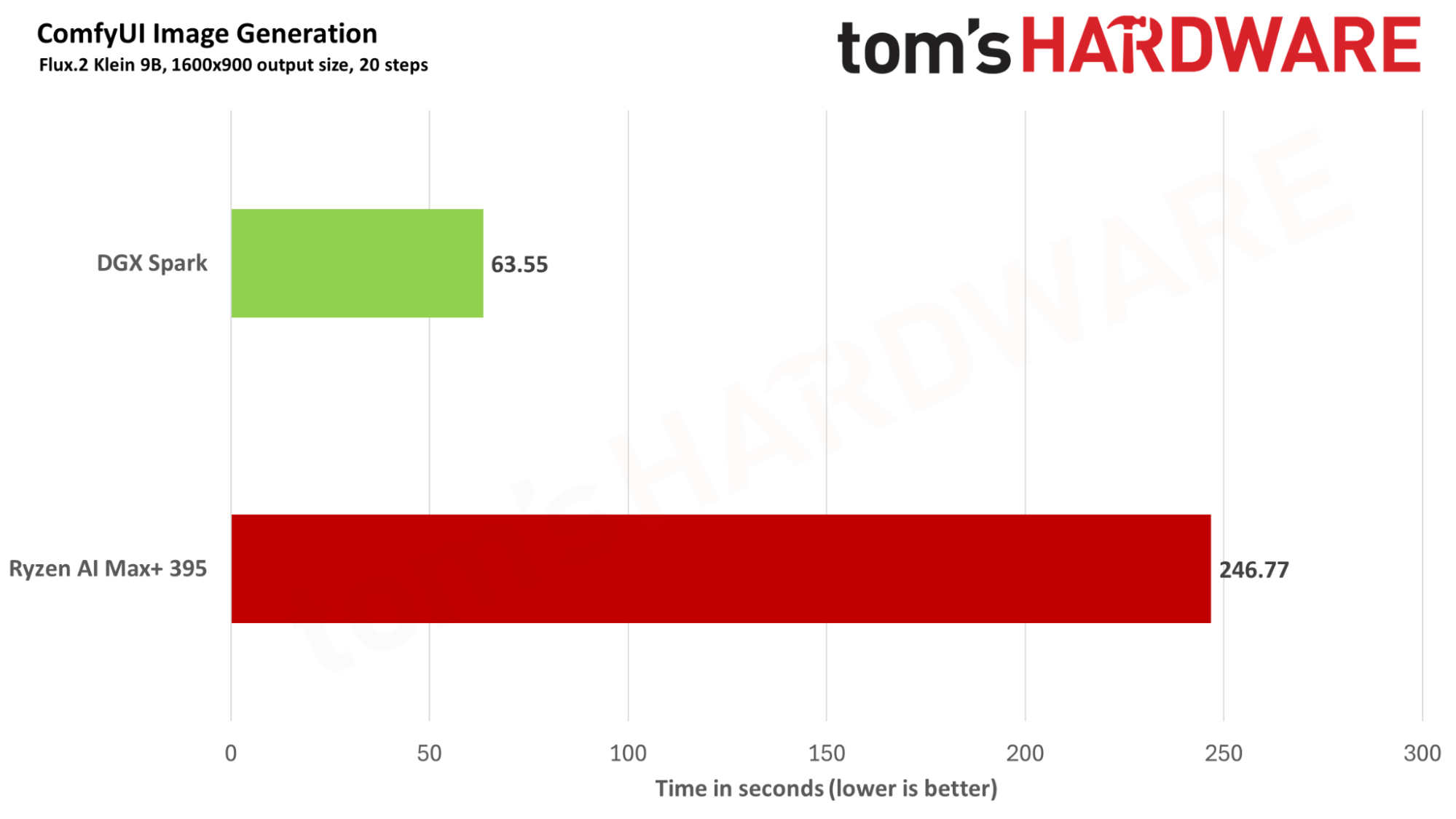

We tested the latest Flux.2 Klein 9B image generation workflow in ComfyUI using the same random seed as a base on both the Spark and the AI Workstation 300. After the first load for the workflow, the AI Workstation 300 needed roughly four times as long to generate an image as GB10 did. That, too, is due in part to GB10's higher raw compute capacity versus the Radeon 8060S.

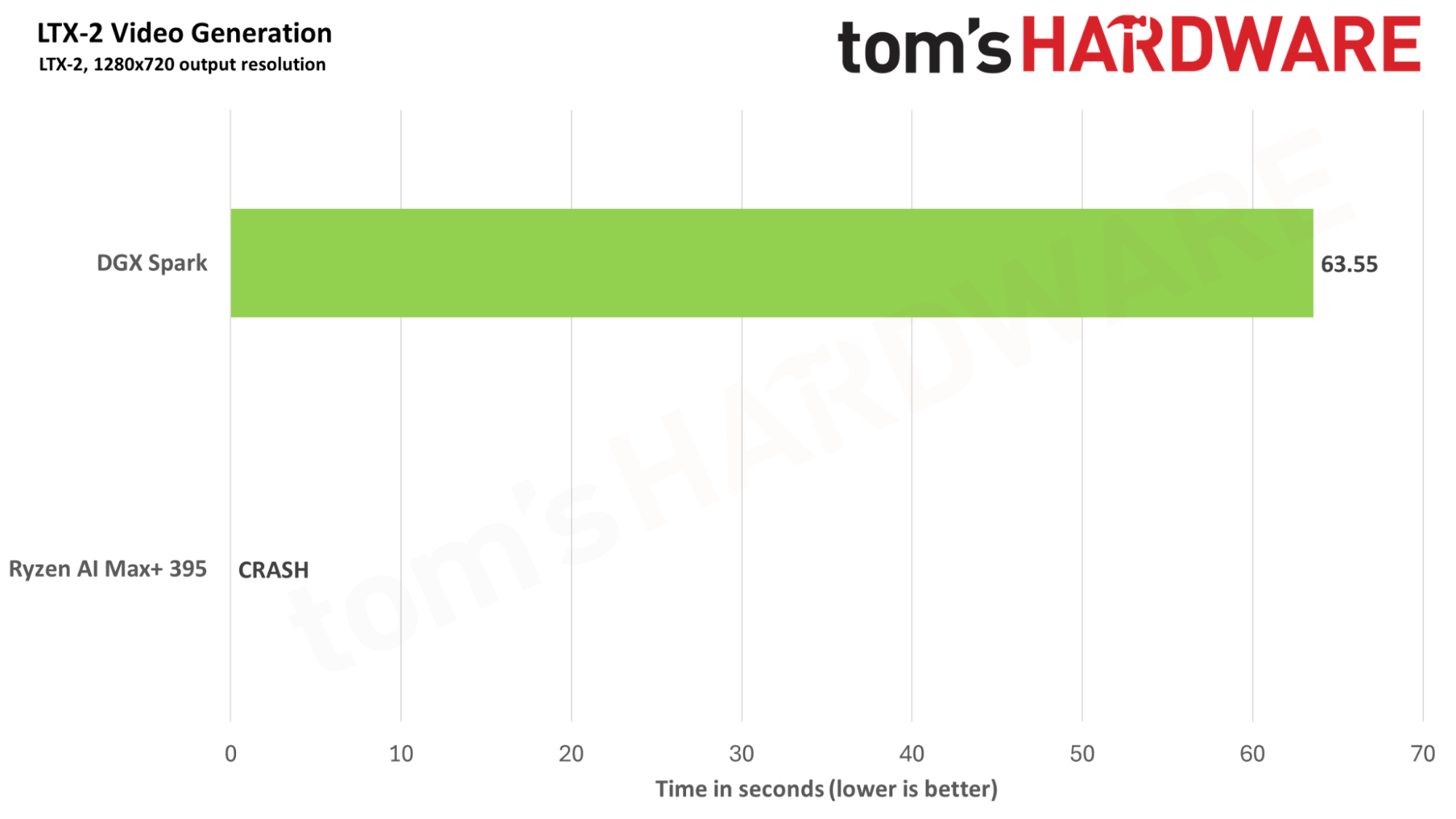

We also gave LTX-2 a try on the AI Workstation 300, but as of the time of our tests, trying to run the same LTX-2 workflow on the ROCm 7.1.1 stack resulted in HIP errors that would hang ComfyUI or crash the entire GNOME desktop environment.

That software immaturity isn’t Corsair’s fault, but it is an unavoidable consequence of building a box around a highly integrated platform like Strix Halo. We’re going to keep an eye on AMD’s continuing efforts to improve ROCm stability and performance, and we’ll see how it changes the appeal of client AI platforms like the AI Workstation 300 and Strix Halo in 2026.

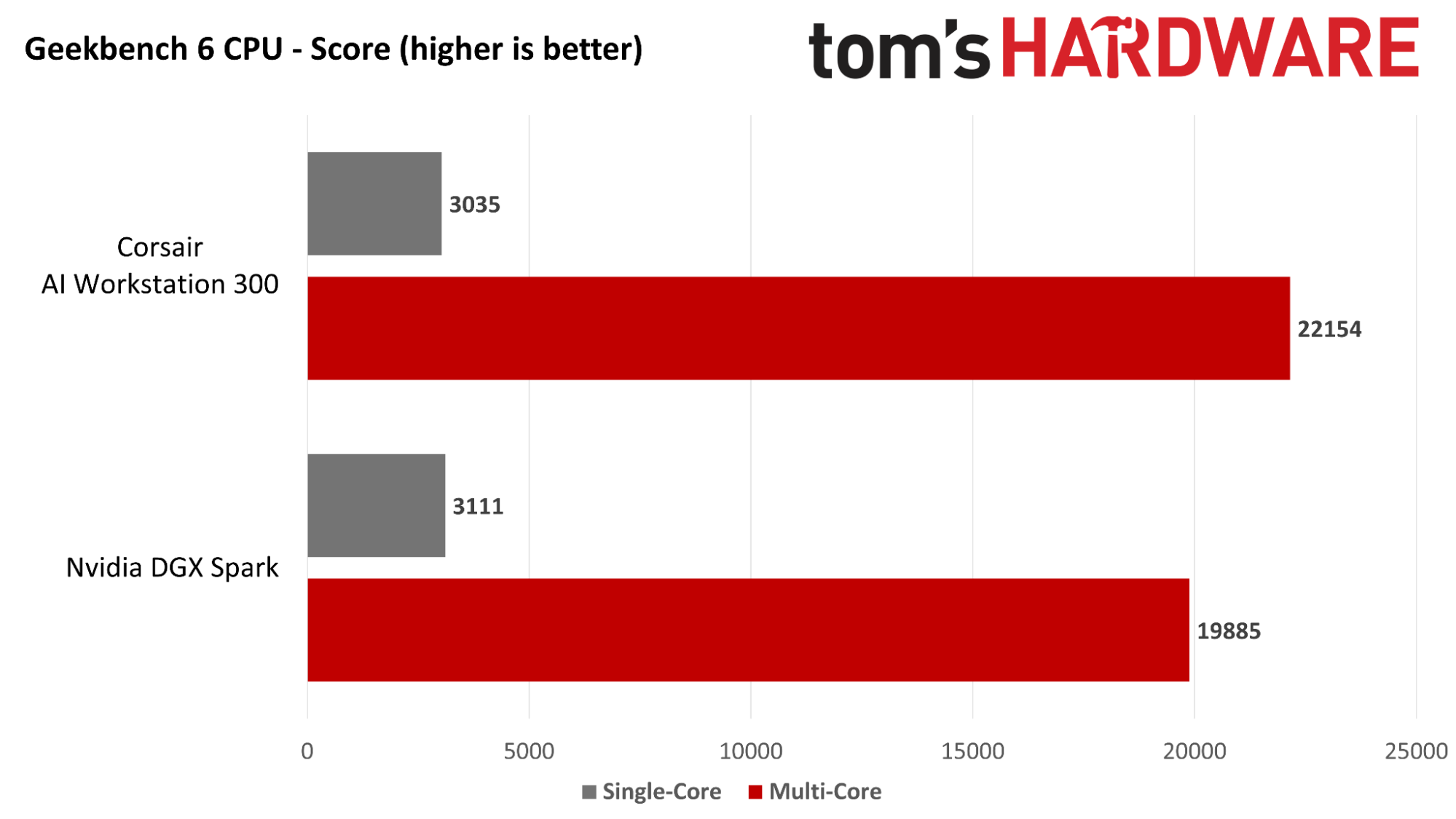

AI isn’t the only reason one might want a local workstation like this, though. The 16 cores and 32 threads of the Ryzen AI Max+ 395 could potentially outrun the 10 performance and 10 efficiency cores of the DGX Spark’s Arm CPU, so we did some supplemental testing with Geekbench 6 under Ubuntu 24.04 to find out.

The Cortex-X925 performance cores and the Zen 5 cores in the Ryzen AI Max+ 395 are closely matched in the single-threaded portion of Geekbench 6. However, the Ryzen AI Max+ 395 pulls 11% ahead in the multi-threaded portion of the benchmark. That means the AI Workstation 300 will be somewhat faster than the DGX Spark in general-purpose parallel computing tasks like code compilation.

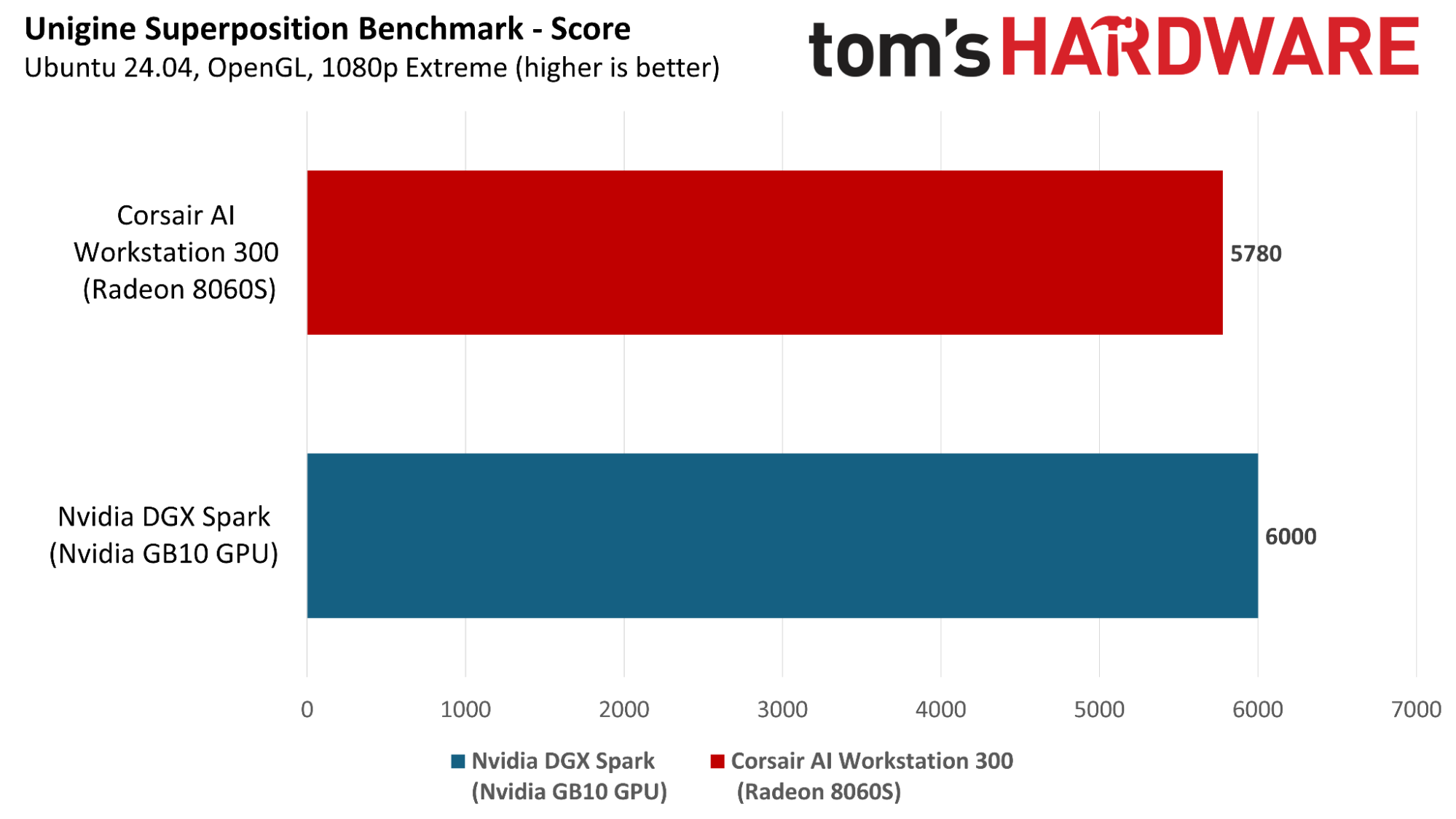

For fun, we also fired up the Unigine Superposition benchmark on both platforms under Ubuntu to get a broad idea of gaming performance.

To be clear, if you’re a gamer first and foremost and don’t need massive amounts of system RAM or VRAM for AI, any PC you can build with even a midrange discrete GPU will vastly outperform both Strix Halo and GB10. But if you’re a local AI enthusiast who wants to game on the side, it’s worth knowing what you’re signing up for.

Despite having 2.4 times as many shader ALUs in its GPU as the Radeon 8060S, the DGX Spark turns in a Superposition score just 4% higher than that of the AI Workstation 300. It’s clear from that result that the limited memory bandwidth of Nvidia’s platform strangles gaming performance. The desktop RTX 5070, which has the same 6144 CUDA cores but much higher memory bandwidth, turns in a Superposition score more than twice as high as that of GB10.

In addition to its roughly equivalent performance, the AI Workstation 300’s main advantage for gaming versus the DGX Spark is that it can boot straight into Windows and get right to it with DirectX titles, all without futzing with x86 emulation layers like FEX. That means you shouldn’t run into show-stopping compatibility issues as we did with Black Myth Wukong on the Spark under Linux.

But as with the DGX Spark, the AI Workstation 300 is an extremely costly way to get a merely passable gaming experience if you don’t strictly need 128GB of RAM to start with. Any traditional gaming PC will be much quicker.

Power, cooling, noise, and thermals

As a highly integrated SoC with mobile roots, the Ryzen AI Max+ 395 has configurable TDPs ranging from 45W to 120W. Corsair exposes them through a front-panel button that switches among three firmware power profiles: Balanced (the default), Max, and Quiet, in that order. Balanced appears to give the SoC a power budget of about 85W, Max around 120W, and Quiet, just 55W.

Each press triggers a brief on-screen indication of the change under Windows, but under Linux, you don’t get any warning that anything has changed, and these settings persist across reboots.

If you casually brush this button, you’re likely to end up in the high-performance Max mode, but you could just as easily end up in the low-performance Quiet mode. Unless you’re paying close attention to the noise levels of the system or have it plugged into a power meter at the wall, you might not notice anything amiss.

It would have been nice if Corsair had put an LED indicator or something on the front of this system to show the active power mode regardless of the OS in use.

In all three power modes, because the system power budget is shared between CPU and GPU, fully loading up both processors at once will reduce performance across both functional units. It’s unlikely that you’ll ever stress the CPU and GPU that way in regular use, but it’s worth understanding that the AI Workstation’s shared power and thermal budget won’t deliver peak CPU and GPU performance with that kind of all-out workload if you do happen to have one.

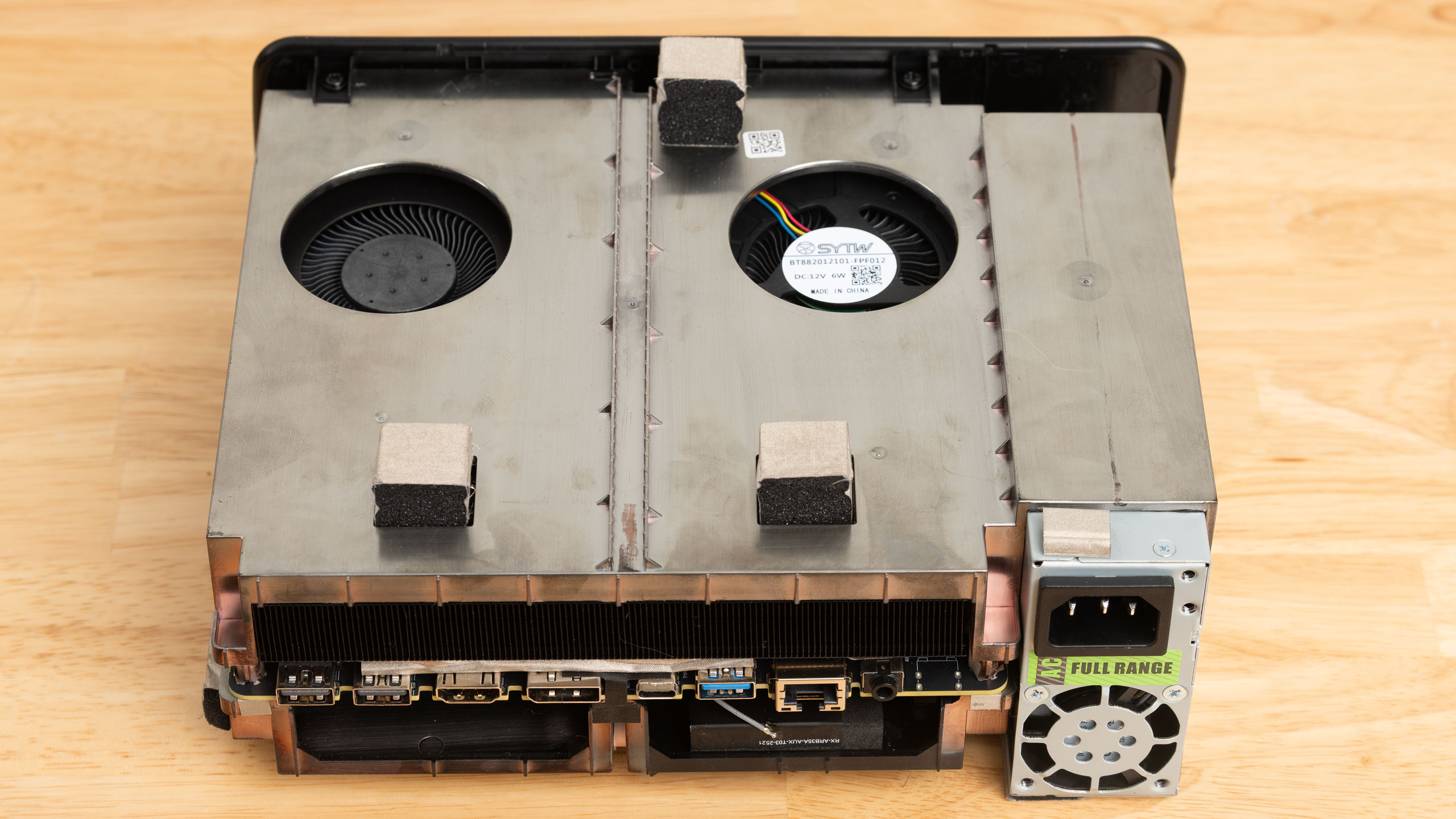

The AI Workstation 300’s chassis and thermal system are more than up to the task of cooling Strix Halo across all of those power modes. Two blower-style fans cool the SoC by moving air through a fin stack that’s connected to the SoC with three heat pipes, and another slim fan draws in air on the other side of the motherboard for the twin NVMe SSDs. The 300W Flex ATX PSU has a tiny fan of its own.

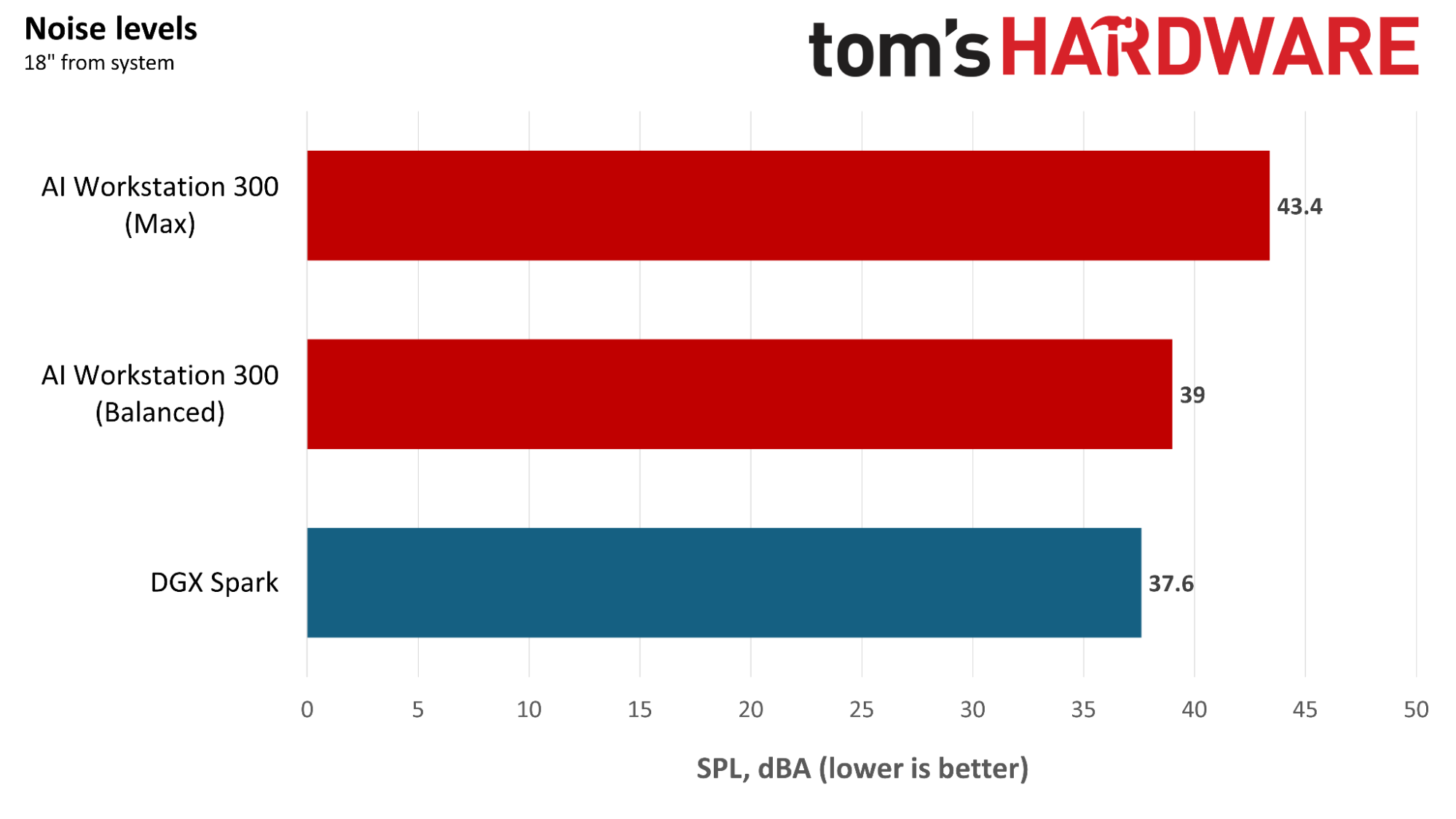

While we appreciate its thorough ventilation, the AI Workstation 300 isn’t the quietest mini-PC around. It’s silent when fully idle, but it audibly spins up its fans under light load, and its noise signature is more prominent than that of the much smaller DGX Spark when either the CPU or GPU is working hard, especially in Max mode.

We measured peak noise levels of 39 dBA from 18 inches (46 cm) away in the AI Workstation 300’s Balanced mode during ComfyUI image generation, compared to 37.5 dBA from the DGX Spark under the same workload. That rises to 43.4 dBA in Max mode under the same workload, although the extra juice and noise barely make a difference for time to completion in ComfyUI.
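Because decibels are logarithmic, small-looking gaps between those readings represent larger differences in sound power. The sketch below converts the measured differences into sound power ratios using the standard 10 dB-per-decade relationship; it says nothing about perceived loudness, which follows a different and more subjective scale.

```python
def sound_power_ratio(louder_dba, quieter_dba):
    """Ratio of sound power implied by a difference in decibel readings."""
    return 10 ** ((louder_dba - quieter_dba) / 10)


# Readings quoted in this review, all taken under the same ComfyUI workload.
balanced_vs_spark = sound_power_ratio(39.0, 37.5)
max_vs_balanced = sound_power_ratio(43.4, 39.0)
print(f"Balanced vs. DGX Spark: {balanced_vs_spark:.2f}x sound power")
print(f"Max vs. Balanced: {max_vs_balanced:.2f}x sound power")
```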

Warranty and support

Since so many Strix Halo boxes feature largely similar feature sets, the post-purchase experience is one way for a company to stand out. Corsair’s warranty and service are a cut above the competition. The company offers a two-year warranty on the AI Workstation 300 with advance replacement options available.

Most lower-cost Strix Halo boxes on Amazon only offer a one-year warranty, and there's no guarantee that you'll be dealing with timely or local tech support in the United States.

Bottom line

As Strix Halo boxes go, Corsair's AI Workstation 300 is a solid one. It's a sleek, well-integrated package with effective cooling that delivers all of the performance potential of the Ryzen AI Max+ 395. Its classy black aluminum shell and its integrated power supply make it more elegant and portable than other ultra-tiny systems in this vein that need an external power brick that can be lost or forgotten on the move.

This system’s 16-core, 32-thread Zen 5 x86 CPU is great for both single-threaded and multithreaded work, and its Radeon 8060S GPU and 128GB of RAM let you explore AI workloads with passable performance while maximizing game and OS compatibility.

The AI Workstation 300 has no trouble keeping Strix Halo cool under load, but we do wish that Corsair’s thermal system were a bit quieter, especially under light usage. There are four fans of varying types and sizes in this system, and it’s noticeably louder under load than the more compact DGX Spark.

There are two big issues facing the AI Workstation 300. One is that Corsair doesn’t control AMD's software quality. AMD still has plenty of work to do to match the stability and maturity of Nvidia’s CUDA stack for AI workloads, and the performance and reliability of partner systems like the AI Workstation 300 are entirely at the mercy of those efforts in the meantime.

ROCm is improving, to be sure, and AMD’s efforts to boost software compatibility have borne fruit of late. The native AMD ComfyUI build that recently became available is one example. But that improvement is an ongoing process, and it means you might run into stability and compatibility issues that you won’t have on Nvidia platforms.

The second issue facing the AI Workstation 300 is its price tag. When we first started testing this system, the street price of the config we received was just $2000 or so, which made its performance shortcomings against Nvidia’s DGX Spark much easier to forgive. But that price tag has since spiked to $3000.

That spike is likely thanks to AI-induced NAND and DRAM shortages, and the entire PC market is feeling that pain, not just Corsair. But at that price, the AI Workstation 300 lands quite close to some 1TB SSD configs of Nvidia GB10 systems, like Asus’ Ascent GX10 or Gigabyte’s AI Top Atom. Corsair includes much more storage for the money in our tested config than those GB10 boxes, but we don’t think that should make or break one’s buying decision for a local AI workstation.

You can add a 4TB M.2 2242 SSD like Corsair’s own MP600 Micro or MP700 Micro to an Asus Ascent GX10, for example, and still come out $500 ahead of the $4000 DGX Spark Founders Edition if non-volatile storage space is paramount. Even at $500 more than the AI Workstation 300, we’d consider that a cost worth paying if productive AI development is your primary interest.

GB10 is both a better and more consistent performer for local AI than Strix Halo thanks to both its fundamental architecture and the maturity of Nvidia’s CUDA software stack, and you’ll enjoy that superior performance and stability every time you put a GB10 system to work. If GB10 systems become more expensive due to the same RAM and NAND crunch, however, the AI Workstation 300’s competitive position will benefit accordingly.

All told, if you need a compact PC with a potent x86 CPU, a solid enough GPU for both gaming and some AI exploration, and enough RAM to handle giant AI models, along with flexible storage options to cover Windows and Linux installations alike, the AI Workstation 300 is still a fine platform.

But unless you’re looking for those things at the absolute lowest possible cost and are willing to sacrifice some performance and software maturity in the bargain, the arrival of Nvidia’s GB10 makes Strix Halo, and the AI Workstation 300 with it, tough to recommend at its current price.